diff --git a/.gitignore b/.gitignore

index 53253b95b3409..c3ef9719143fd 100644

--- a/.gitignore

+++ b/.gitignore

@@ -79,3 +79,6 @@ doc-tools/missing-doclet/bin/

/modules/parquet-data-format/src/main/rust/target

/modules/parquet-data-format/src/main/resources/native/

+/plugins/dataformat-csv/jni/target

+/plugins/dataformat-csv/jni/Cargo.lock

+

diff --git a/.idea/runConfigurations/Debug_OpenSearch.xml b/.idea/runConfigurations/Debug_OpenSearch.xml

index fddcf47728460..c18046f873477 100644

--- a/.idea/runConfigurations/Debug_OpenSearch.xml

+++ b/.idea/runConfigurations/Debug_OpenSearch.xml

@@ -1,11 +1,15 @@

-

-

-

-

-

-

-

-

-

-

+

+

+

+

+

+

+

+

+

+

+

+

+

+

\ No newline at end of file

diff --git a/modules/parquet-data-format/.whitesource b/modules/parquet-data-format/.whitesource

new file mode 100644

index 0000000000000..bb071b4a2b1ce

--- /dev/null

+++ b/modules/parquet-data-format/.whitesource

@@ -0,0 +1,45 @@

+{

+ "scanSettings": {

+ "configMode": "AUTO",

+ "configExternalURL": "",

+ "projectToken": "",

+ "baseBranches": []

+ },

+ "scanSettingsSAST": {

+ "enableScan": false,

+ "scanPullRequests": false,

+ "incrementalScan": true,

+ "baseBranches": [],

+ "snippetSize": 10

+ },

+ "checkRunSettings": {

+ "vulnerableCheckRunConclusionLevel": "failure",

+ "displayMode": "diff",

+ "useMendCheckNames": true

+ },

+ "checkRunSettingsSAST": {

+ "checkRunConclusionLevel": "failure",

+ "severityThreshold": "high"

+ },

+ "issueSettings": {

+ "minSeverityLevel": "LOW",

+ "issueType": "DEPENDENCY"

+ },

+ "issueSettingsSAST": {

+ "minSeverityLevel": "high",

+ "issueType": "repo"

+ },

+ "remediateSettings": {

+ "workflowRules": {

+ "enabled": true

+ }

+ },

+ "imageSettings":{

+ "imageTracing":{

+ "enableImageTracingPR": false,

+ "addRepositoryCoordinate": false,

+ "addDockerfilePath": false,

+ "addMendIdentifier": false

+ }

+ }

+}

\ No newline at end of file

diff --git a/modules/parquet-data-format/CONTRIBUTING.md b/modules/parquet-data-format/CONTRIBUTING.md

new file mode 100644

index 0000000000000..95baabd2bef24

--- /dev/null

+++ b/modules/parquet-data-format/CONTRIBUTING.md

@@ -0,0 +1,115 @@

+# Contributing Guidelines

+

+Thank you for your interest in contributing to our project. Whether it's a bug report, new feature, correction, or additional

+documentation, we greatly value feedback and contributions from our community.

+

+Please read through this document before submitting any issues or pull requests to ensure we have all the necessary

+information to effectively respond to your bug report or contribution.

+

+

+## Reporting Bugs/Feature Requests

+

+We welcome you to use the GitHub issue tracker to report bugs or suggest features.

+

+When filing an issue, please check existing open, or recently closed, issues to make sure somebody else hasn't already

+reported the issue. Please try to include as much information as you can. Details like these are incredibly useful:

+

+* A reproducible test case or series of steps

+* The version of our code being used

+* Any modifications you've made relevant to the bug

+* Anything unusual about your environment or deployment

+

+

+## Contributing via Pull Requests

+Contributions via pull requests are much appreciated. Before sending us a pull request, please ensure that:

+

+1. You are working against the latest source on the *main* branch.

+2. You check existing open, and recently merged, pull requests to make sure someone else hasn't addressed the problem already.

+3. You open an issue to discuss any significant work - we would hate for your time to be wasted.

+

+To send us a pull request, please:

+

+1. Fork the repository.

+2. Modify the source; please focus on the specific change you are contributing. If you also reformat all the code, it will be hard for us to focus on your change.

+3. Ensure local tests pass.

+4. Commit to your fork using clear commit messages.

+5. Send us a pull request, answering any default questions in the pull request interface.

+6. Pay attention to any automated CI failures reported in the pull request, and stay involved in the conversation.

+

+GitHub provides additional document on [forking a repository](https://help.github.com/articles/fork-a-repo/) and

+[creating a pull request](https://help.github.com/articles/creating-a-pull-request/).

+

+## Developer Certificate of Origin

+

+OpenSearch is an open source product released under the Apache 2.0 license (see either [the Apache site](https://www.apache.org/licenses/LICENSE-2.0) or the [LICENSE.txt file](LICENSE.txt)). The Apache 2.0 license allows you to freely use, modify, distribute, and sell your own products that include Apache 2.0 licensed software.

+

+We respect intellectual property rights of others and we want to make sure all incoming contributions are correctly attributed and licensed. A Developer Certificate of Origin (DCO) is a lightweight mechanism to do that.

+

+The DCO is a declaration attached to every contribution made by every developer. In the commit message of the contribution, the developer simply adds a `Signed-off-by` statement and thereby agrees to the DCO, which you can find below or at [DeveloperCertificate.org](http://developercertificate.org/).

+

+```

+Developer's Certificate of Origin 1.1

+

+By making a contribution to this project, I certify that:

+

+(a) The contribution was created in whole or in part by me and I

+ have the right to submit it under the open source license

+ indicated in the file; or

+

+(b) The contribution is based upon previous work that, to the

+ best of my knowledge, is covered under an appropriate open

+ source license and I have the right under that license to

+ submit that work with modifications, whether created in whole

+ or in part by me, under the same open source license (unless

+ I am permitted to submit under a different license), as

+ Indicated in the file; or

+

+(c) The contribution was provided directly to me by some other

+ person who certified (a), (b) or (c) and I have not modified

+ it.

+

+(d) I understand and agree that this project and the contribution

+ are public and that a record of the contribution (including

+ all personal information I submit with it, including my

+ sign-off) is maintained indefinitely and may be redistributed

+ consistent with this project or the open source license(s)

+ involved.

+ ```

+

+We require that every contribution to OpenSearch is signed with a Developer Certificate of Origin. Additionally, please use your real name. We do not accept anonymous contributors nor those utilizing pseudonyms.

+

+Each commit must include a DCO which looks like this

+

+```

+Signed-off-by: Jane Smith

+```

+

+You may type this line on your own when writing your commit messages. However, if your user.name and user.email are set in your git configs, you can use `-s` or `– – signoff` to add the `Signed-off-by` line to the end of the commit message.

+

+## Finding contributions to work on

+Looking at the existing issues is a great way to find something to contribute on. As our projects, by default, use the default GitHub issue labels (enhancement/bug/duplicate/help wanted/invalid/question/wontfix), looking at any 'help wanted' issues is a great place to start.

+

+

+## Code of Conduct

+This project has adopted the [Amazon Open Source Code of Conduct](https://aws.github.io/code-of-conduct).

+For more information see the [Code of Conduct FAQ](https://aws.github.io/code-of-conduct-faq) or contact

+opensource-codeofconduct@amazon.com with any additional questions or comments.

+

+

+## Security issue notifications

+If you discover a potential security issue in this project we ask that you notify AWS/Amazon Security via our [vulnerability reporting page](http://aws.amazon.com/security/vulnerability-reporting/). Please do **not** create a public github issue.

+

+## License Headers

+

+New files in your code contributions should contain the following license header. If you are modifying existing files with license headers, or including new files that already have license headers, do not remove or modify them without guidance.

+

+```

+/*

+ * Copyright OpenSearch Contributors

+ * SPDX-License-Identifier: Apache-2.0

+ */

+```

+

+## Licensing

+

+See the [LICENSE](LICENSE.txt) file for our project's licensing. We will ask you to confirm the licensing of your contribution.

diff --git a/modules/parquet-data-format/LICENSE.txt b/modules/parquet-data-format/LICENSE.txt

new file mode 100644

index 0000000000000..261eeb9e9f8b2

--- /dev/null

+++ b/modules/parquet-data-format/LICENSE.txt

@@ -0,0 +1,201 @@

+ Apache License

+ Version 2.0, January 2004

+ http://www.apache.org/licenses/

+

+ TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

+

+ 1. Definitions.

+

+ "License" shall mean the terms and conditions for use, reproduction,

+ and distribution as defined by Sections 1 through 9 of this document.

+

+ "Licensor" shall mean the copyright owner or entity authorized by

+ the copyright owner that is granting the License.

+

+ "Legal Entity" shall mean the union of the acting entity and all

+ other entities that control, are controlled by, or are under common

+ control with that entity. For the purposes of this definition,

+ "control" means (i) the power, direct or indirect, to cause the

+ direction or management of such entity, whether by contract or

+ otherwise, or (ii) ownership of fifty percent (50%) or more of the

+ outstanding shares, or (iii) beneficial ownership of such entity.

+

+ "You" (or "Your") shall mean an individual or Legal Entity

+ exercising permissions granted by this License.

+

+ "Source" form shall mean the preferred form for making modifications,

+ including but not limited to software source code, documentation

+ source, and configuration files.

+

+ "Object" form shall mean any form resulting from mechanical

+ transformation or translation of a Source form, including but

+ not limited to compiled object code, generated documentation,

+ and conversions to other media types.

+

+ "Work" shall mean the work of authorship, whether in Source or

+ Object form, made available under the License, as indicated by a

+ copyright notice that is included in or attached to the work

+ (an example is provided in the Appendix below).

+

+ "Derivative Works" shall mean any work, whether in Source or Object

+ form, that is based on (or derived from) the Work and for which the

+ editorial revisions, annotations, elaborations, or other modifications

+ represent, as a whole, an original work of authorship. For the purposes

+ of this License, Derivative Works shall not include works that remain

+ separable from, or merely link (or bind by name) to the interfaces of,

+ the Work and Derivative Works thereof.

+

+ "Contribution" shall mean any work of authorship, including

+ the original version of the Work and any modifications or additions

+ to that Work or Derivative Works thereof, that is intentionally

+ submitted to Licensor for inclusion in the Work by the copyright owner

+ or by an individual or Legal Entity authorized to submit on behalf of

+ the copyright owner. For the purposes of this definition, "submitted"

+ means any form of electronic, verbal, or written communication sent

+ to the Licensor or its representatives, including but not limited to

+ communication on electronic mailing lists, source code control systems,

+ and issue tracking systems that are managed by, or on behalf of, the

+ Licensor for the purpose of discussing and improving the Work, but

+ excluding communication that is conspicuously marked or otherwise

+ designated in writing by the copyright owner as "Not a Contribution."

+

+ "Contributor" shall mean Licensor and any individual or Legal Entity

+ on behalf of whom a Contribution has been received by Licensor and

+ subsequently incorporated within the Work.

+

+ 2. Grant of Copyright License. Subject to the terms and conditions of

+ this License, each Contributor hereby grants to You a perpetual,

+ worldwide, non-exclusive, no-charge, royalty-free, irrevocable

+ copyright license to reproduce, prepare Derivative Works of,

+ publicly display, publicly perform, sublicense, and distribute the

+ Work and such Derivative Works in Source or Object form.

+

+ 3. Grant of Patent License. Subject to the terms and conditions of

+ this License, each Contributor hereby grants to You a perpetual,

+ worldwide, non-exclusive, no-charge, royalty-free, irrevocable

+ (except as stated in this section) patent license to make, have made,

+ use, offer to sell, sell, import, and otherwise transfer the Work,

+ where such license applies only to those patent claims licensable

+ by such Contributor that are necessarily infringed by their

+ Contribution(s) alone or by combination of their Contribution(s)

+ with the Work to which such Contribution(s) was submitted. If You

+ institute patent litigation against any entity (including a

+ cross-claim or counterclaim in a lawsuit) alleging that the Work

+ or a Contribution incorporated within the Work constitutes direct

+ or contributory patent infringement, then any patent licenses

+ granted to You under this License for that Work shall terminate

+ as of the date such litigation is filed.

+

+ 4. Redistribution. You may reproduce and distribute copies of the

+ Work or Derivative Works thereof in any medium, with or without

+ modifications, and in Source or Object form, provided that You

+ meet the following conditions:

+

+ (a) You must give any other recipients of the Work or

+ Derivative Works a copy of this License; and

+

+ (b) You must cause any modified files to carry prominent notices

+ stating that You changed the files; and

+

+ (c) You must retain, in the Source form of any Derivative Works

+ that You distribute, all copyright, patent, trademark, and

+ attribution notices from the Source form of the Work,

+ excluding those notices that do not pertain to any part of

+ the Derivative Works; and

+

+ (d) If the Work includes a "NOTICE" text file as part of its

+ distribution, then any Derivative Works that You distribute must

+ include a readable copy of the attribution notices contained

+ within such NOTICE file, excluding those notices that do not

+ pertain to any part of the Derivative Works, in at least one

+ of the following places: within a NOTICE text file distributed

+ as part of the Derivative Works; within the Source form or

+ documentation, if provided along with the Derivative Works; or,

+ within a display generated by the Derivative Works, if and

+ wherever such third-party notices normally appear. The contents

+ of the NOTICE file are for informational purposes only and

+ do not modify the License. You may add Your own attribution

+ notices within Derivative Works that You distribute, alongside

+ or as an addendum to the NOTICE text from the Work, provided

+ that such additional attribution notices cannot be construed

+ as modifying the License.

+

+ You may add Your own copyright statement to Your modifications and

+ may provide additional or different license terms and conditions

+ for use, reproduction, or distribution of Your modifications, or

+ for any such Derivative Works as a whole, provided Your use,

+ reproduction, and distribution of the Work otherwise complies with

+ the conditions stated in this License.

+

+ 5. Submission of Contributions. Unless You explicitly state otherwise,

+ any Contribution intentionally submitted for inclusion in the Work

+ by You to the Licensor shall be under the terms and conditions of

+ this License, without any additional terms or conditions.

+ Notwithstanding the above, nothing herein shall supersede or modify

+ the terms of any separate license agreement you may have executed

+ with Licensor regarding such Contributions.

+

+ 6. Trademarks. This License does not grant permission to use the trade

+ names, trademarks, service marks, or product names of the Licensor,

+ except as required for reasonable and customary use in describing the

+ origin of the Work and reproducing the content of the NOTICE file.

+

+ 7. Disclaimer of Warranty. Unless required by applicable law or

+ agreed to in writing, Licensor provides the Work (and each

+ Contributor provides its Contributions) on an "AS IS" BASIS,

+ WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

+ implied, including, without limitation, any warranties or conditions

+ of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

+ PARTICULAR PURPOSE. You are solely responsible for determining the

+ appropriateness of using or redistributing the Work and assume any

+ risks associated with Your exercise of permissions under this License.

+

+ 8. Limitation of Liability. In no event and under no legal theory,

+ whether in tort (including negligence), contract, or otherwise,

+ unless required by applicable law (such as deliberate and grossly

+ negligent acts) or agreed to in writing, shall any Contributor be

+ liable to You for damages, including any direct, indirect, special,

+ incidental, or consequential damages of any character arising as a

+ result of this License or out of the use or inability to use the

+ Work (including but not limited to damages for loss of goodwill,

+ work stoppage, computer failure or malfunction, or any and all

+ other commercial damages or losses), even if such Contributor

+ has been advised of the possibility of such damages.

+

+ 9. Accepting Warranty or Additional Liability. While redistributing

+ the Work or Derivative Works thereof, You may choose to offer,

+ and charge a fee for, acceptance of support, warranty, indemnity,

+ or other liability obligations and/or rights consistent with this

+ License. However, in accepting such obligations, You may act only

+ on Your own behalf and on Your sole responsibility, not on behalf

+ of any other Contributor, and only if You agree to indemnify,

+ defend, and hold each Contributor harmless for any liability

+ incurred by, or claims asserted against, such Contributor by reason

+ of your accepting any such warranty or additional liability.

+

+ END OF TERMS AND CONDITIONS

+

+ APPENDIX: How to apply the Apache License to your work.

+

+ To apply the Apache License to your work, attach the following

+ boilerplate notice, with the fields enclosed by brackets "[]"

+ replaced with your own identifying information. (Don't include

+ the brackets!) The text should be enclosed in the appropriate

+ comment syntax for the file format. We also recommend that a

+ file or class name and description of purpose be included on the

+ same "printed page" as the copyright notice for easier

+ identification within third-party archives.

+

+ Copyright [yyyy] [name of copyright owner]

+

+ Licensed under the Apache License, Version 2.0 (the "License");

+ you may not use this file except in compliance with the License.

+ You may obtain a copy of the License at

+

+ http://www.apache.org/licenses/LICENSE-2.0

+

+ Unless required by applicable law or agreed to in writing, software

+ distributed under the License is distributed on an "AS IS" BASIS,

+ WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ See the License for the specific language governing permissions and

+ limitations under the License.

diff --git a/modules/parquet-data-format/MAINTAINERS.md b/modules/parquet-data-format/MAINTAINERS.md

new file mode 100644

index 0000000000000..2e99a453438ac

--- /dev/null

+++ b/modules/parquet-data-format/MAINTAINERS.md

@@ -0,0 +1,12 @@

+## Overview

+

+This document contains a list of maintainers in this repo. See [opensearch-project/.github/RESPONSIBILITIES.md](https://github.com/opensearch-project/.github/blob/main/RESPONSIBILITIES.md#maintainer-responsibilities) that explains what the role of maintainer means, what maintainers do in this and other repos, and how they should be doing it. If you're interested in contributing, and becoming a maintainer, see [CONTRIBUTING](CONTRIBUTING.md).

+

+## Current Maintainers

+

+| Maintainer | GitHub ID | Affiliation |

+| ------------------------ | ------------------------------------------------------- | ----------- |

+| Amitai Stern | [AmiStrn](https://github.com/AmiStrn) | Independent |

+| Daniel "dB." Doubrovkine | [dblock](https://github.com/dblock) | Independent |

+| Sarat Vemulapalli | [saratvemulapalli](https://github.com/saratvemulapalli) | Amazon |

+| Andriy Redko | [reta](https://github.com/reta) | Independent |

diff --git a/modules/parquet-data-format/NOTICE.txt b/modules/parquet-data-format/NOTICE.txt

new file mode 100644

index 0000000000000..731cb60065bc3

--- /dev/null

+++ b/modules/parquet-data-format/NOTICE.txt

@@ -0,0 +1,2 @@

+OpenSearch (https://opensearch.org/)

+Copyright OpenSearch Contributors

diff --git a/modules/parquet-data-format/README.md b/modules/parquet-data-format/README.md

new file mode 100644

index 0000000000000..1ed47651ec2a2

--- /dev/null

+++ b/modules/parquet-data-format/README.md

@@ -0,0 +1,221 @@

+# Template for creating OpenSearch Plugins

+This Repo is a GitHub Template repository ([Learn more about that](https://docs.github.com/articles/creating-a-repository-from-a-template/)).

+Using it would create a new repo that is the boilerplate code required for an [OpenSearch Plugin](https://opensearch.org/blog/technical-posts/2021/06/my-first-steps-in-opensearch-plugins/).

+This plugin on its own would not add any functionality to OpenSearch, but it is still ready to be installed.

+It comes packaged with:

+ - Integration tests of two types: Yaml and IntegTest.

+ - Empty unit tests file

+ - Notice and License files (Apache License, Version 2.0)

+ - A `build.gradle` file supporting this template's current state.

+

+---

+---

+1. [Create your plugin repo using this template](#create-your-plugin-repo-using-this-template)

+ - [Official plugins](#official-plugins)

+ - [Thirdparty plugins](#thirdparty-plugins)

+2. [Fix up the template to match your new plugin requirements](#fix-up-the-template-to-match-your-new-plugin-requirements)

+ - [Plugin Name](#plugin-name)

+ - [Plugin Path](#plugin-path)

+ - [Update the `build.gradle` file](#update-the-buildgradle-file)

+ - [Update the tests](#update-the-tests)

+ - [Running the tests](#running-the-tests)

+ - [Running testClusters with the plugin installed](#running-testclusters-with-the-plugin-installed)

+ - [Cleanup template code](#cleanup-template-code)

+ - [Editing the CI workflow](#Editing-the-CI-workflow)

+3. [License](#license)

+4. [Copyright](#copyright)

+---

+---

+

+## Create your plugin repo using this template

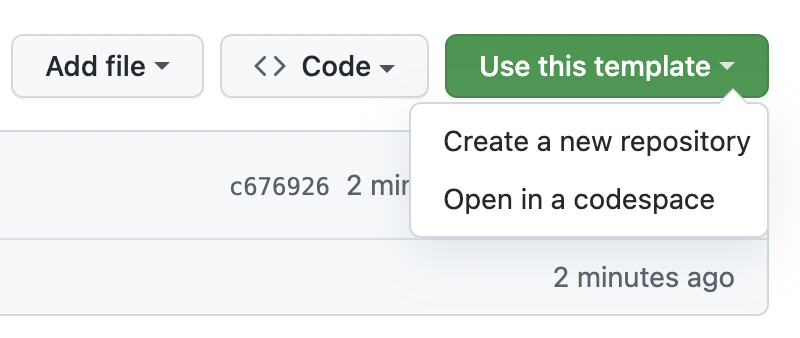

+Click on "Use this Template"

+

+

+

+Name the repository, and provide a description.

+

+Depending on the plugin relationship with the OpenSearch organization we currently recommend the following naming conventions and optional follow-up checks:

+

+### Official plugins

+

+For the **official plugins** that live within the OpenSearch organization (i.e. they are included in [OpenSearch/plugins/](https://github.com/opensearch-project/OpenSearch/tree/main/plugins) or [OpenSearch/modules/](https://github.com/opensearch-project/OpenSearch/tree/main/modules) folder), and **which share the same release cycle as OpenSearch** itself:

+

+- Do not include the word `plugin` in the repo name (e.g. [job-scheduler](https://github.com/opensearch-project/job-scheduler))

+- Use lowercase repo names

+- Use spinal case for repo names (e.g. [job-scheduler](https://github.com/opensearch-project/job-scheduler))

+- Do not include the word `OpenSearch` or `OpenSearch Dashboards` in the repo name

+- Provide a meaningful description, e.g. `An OpenSearch Dashboards plugin to perform real-time and historical anomaly detection on OpenSearch data`.

+

+### Thirdparty plugins

+

+For the **3rd party plugins** that are maintained as independent projects in separate GitHub repositories **with their own release cycles** the recommended naming convention should follow the same rules as official plugins with some exceptions and few follow-up checks:

+

+- Inclusion of the words like `OpenSearch` or `OpenSearch Dashboard` (and in reasonable cases even `plugin`) are welcome because they can increase the chance of discoverability of the repository

+- Check the plugin versioning policy is documented and help users know which versions of the plugin are compatible and recommended for specific versions of OpenSearch

+- Review [CONTRIBUTING.md](CONTRIBUTING.md) document which is by default tailored to the needs of Amazon Web Services developer teams. You might want to update or further customize specific parts related to:

+ - **Code of Conduct** (if you do not already have CoC policy then there are several options to start with, such as [Contributor Covenant](https://www.contributor-covenant.org/)),

+ - **Security Policy** (you should let users know how they can safely report security vulnerabilities),

+ - Check if you need explicit part about **Trademarks and Attributions** (if you use any registered or non-registered trademarks we recommend following applicable "trademark-use" documents provided by respective trademark owners)

+

+## Fix up the template to match your new plugin requirements

+

+This is the file tree structure of the source code, as you can see there are some things you will want to change.

+

+```

+`-- src

+ |-- main

+ | `-- java

+ | `-- org

+ | `-- example

+ | `-- path

+ | `-- to

+ | `-- plugin

+ | `-- RenamePlugin.java

+ |-- test

+ | `-- java

+ | `-- org

+ | `-- example

+ | `-- path

+ | `-- to

+ | `-- plugin

+ | |-- RenamePluginIT.java

+ | `-- RenameTests.java

+ `-- yamlRestTest

+ |-- java

+ | `-- org

+ | `-- example

+ | `-- path

+ | `-- to

+ | `-- plugin

+ | `-- RenameClientYamlTestSuiteIT.java

+ `-- resources

+ `-- rest-api-spec

+ `-- test

+ `-- 10_basic.yml

+

+```

+

+### Plugin Name

+Now that you have named the repo, you can change the plugin class `RenamePlugin.java` to have a meaningful name, keeping the `Plugin` suffix.

+Change `RenamePluginIT.java`, `RenameTests.java`, and `RenameClientYamlTestSuiteIT.java` accordingly, keeping the `PluginIT`, `Tests`, and `ClientYamlTestSuiteIT` suffixes.

+

+### Plugin Path

+You will need to change these paths in the source tree:

+

+1) Package Path

+ ```

+ `-- org

+ `-- example

+ ```

+ Let's call this our *package path*. In Java, package naming convention is to use a domain name in order to create a unique package name.

+ This is normally your organization's domain.

+

+2) Plugin Path

+ ```

+ `-- path

+ `-- to

+ `-- plugin

+ ```

+ Let's call this our *plugin path*, as the plugin class would be installed in OpenSearch under that path.

+ This can be an existing path in OpenSearch, or it can be a unique path for your plugin. We recommend changing it to something meaningful.

+

+3) Change all these path occurrences to match your chosen path and naming by following [this](#update-the-buildgradle-file) section

+

+### Update the `build.gradle` file

+

+Update the following section, using the new repository name and description, plugin class name, package and plugin paths:

+

+```

+def pluginName = 'rename' // Can be the same as new repo name except including words `plugin` or `OpenSearch` is discouraged

+def pluginDescription = 'Custom plugin' // Can be same as new repo description

+def packagePath = 'org.example' // The package name for your plugin (convention is to use your organization's domain name)

+def pathToPlugin = 'path.to.plugin' // The path you chose for the plugin

+def pluginClassName = 'RenamePlugin' // The plugin class name

+```

+

+Next update the version of OpenSearch you want the plugin to be installed into. Change the following param:

+```

+ ext {

+ opensearch_version = "1.0.0-beta1" // <-- change this to the version your plugin requires

+ }

+```

+

+- Run `./gradlew preparePluginPathDirs` in the terminal

+- Move the java classes into the new directories (will require to edit the `package` name in the files as well)

+- Delete the old directories (the `org.example` directories)

+

+### Update the tests

+Notice that in the tests we are checking that the plugin was installed by sending a GET `/_cat/plugins` request to the cluster and expecting `rename` to be in the response.

+In order for the tests to pass you must change `rename` in `RenamePluginIT.java` and in `10_basic.yml` to be the `pluginName` you defined in the `build.gradle` file in the previous section.

+

+### Running the tests

+You may need to install OpenSearch and build a local artifact for the integration tests and build tools ([Learn more here](https://github.com/opensearch-project/opensearch-plugins/blob/main/BUILDING.md)):

+

+```

+~/OpenSearch (main)> git checkout 1.0.0-beta1 -b beta1-release

+~/OpenSearch (main)> ./gradlew publishToMavenLocal -Dbuild.version_qualifier=beta1 -Dbuild.snapshot=false

+```

+

+Now you can run all the tests like so:

+```

+./gradlew check

+```

+

+### Running testClusters with the plugin installed

+```

+./gradlew run

+```

+

+Then you can see that your plugin has been installed by running:

+```

+curl -XGET 'localhost:9200/_cat/plugins'

+```

+

+### Cleanup template code

+- You can now delete the unused paths - `path/to/plugin`.

+- Remove this from the `build.gradle`:

+

+```

+tasks.register("preparePluginPathDirs") {

+ mustRunAfter clean

+ doLast {

+ def newPath = pathToPlugin.replace(".", "/")

+ mkdir "src/main/java/$packagePath/$newPath"

+ mkdir "src/test/java/$packagePath/$newPath"

+ mkdir "src/yamlRestTest/java/$packagePath/$newPath"

+ }

+}

+```

+

+- Last but not least, add your own `README.md` instead of this one

+

+### Editing the CI workflow

+You may want to edit the CI of your new repo.

+

+In your new GitHub repo, head over to `.github/workflows/CI.yml`. This file describes the workflow for testing new push or pull-request actions on the repo.

+Currently, it is configured to build the plugin and run all the tests in it.

+

+You may need to alter the dependencies required by your new plugin.

+Also, the **OpenSearch version** in the `Build OpenSearch` and in the `Build and Run Tests` steps should match your plugins version in the `build.gradle` file.

+

+To view more complex CI examples you may want to checkout the workflows in official OpenSearch plugins, such as [anomaly-detection](https://github.com/opensearch-project/anomaly-detection/blob/main/.github/workflows/test_build_multi_platform.yml).

+

+## Your Plugin's License

+Source code files in this template contains the following header:

+```

+/*

+* SPDX-License-Identifier: Apache-2.0

+*

+* The OpenSearch Contributors require contributions made to

+* this file be licensed under the Apache-2.0 license or a

+* compatible open source license.

+ */

+```

+This plugin template is indeed open-sourced while you might choose to use it to create a proprietary plugin.

+Be sure to update your plugin to meet any licensing requirements you may be subject to.

+

+## License

+This code is licensed under the Apache 2.0 License. See [LICENSE.txt](LICENSE.txt).

+

+## Copyright

+Copyright OpenSearch Contributors. See [NOTICE](NOTICE.txt) for details.

diff --git a/modules/parquet-data-format/src/main/resources/native/macos/aarch64/libparquet_dataformat_jni.d b/modules/parquet-data-format/src/main/resources/native/macos/aarch64/libparquet_dataformat_jni.d

new file mode 100644

index 0000000000000..13c61a20e25f8

--- /dev/null

+++ b/modules/parquet-data-format/src/main/resources/native/macos/aarch64/libparquet_dataformat_jni.d

@@ -0,0 +1 @@

+/Users/abandeji/Public/workplace/OpenSearch/modules/parquet-data-format/src/main/rust/target/release/libparquet_dataformat_jni.dylib: /Users/abandeji/Public/workplace/OpenSearch/modules/parquet-data-format/src/main/rust/src/lib.rs

diff --git a/modules/parquet-data-format/src/main/resources/native/macos/aarch64/libparquet_dataformat_jni.dylib b/modules/parquet-data-format/src/main/resources/native/macos/aarch64/libparquet_dataformat_jni.dylib

new file mode 100644

index 0000000000000..1609ae921c5fd

Binary files /dev/null and b/modules/parquet-data-format/src/main/resources/native/macos/aarch64/libparquet_dataformat_jni.dylib differ

diff --git a/modules/parquet-data-format/src/main/rust/Cargo.lock b/modules/parquet-data-format/src/main/rust/Cargo.lock

new file mode 100644

index 0000000000000..5193e16b8f26e

--- /dev/null

+++ b/modules/parquet-data-format/src/main/rust/Cargo.lock

@@ -0,0 +1,1530 @@

+# This file is automatically @generated by Cargo.

+# It is not intended for manual editing.

+version = 4

+

+[[package]]

+name = "adler2"

+version = "2.0.1"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "320119579fcad9c21884f5c4861d16174d0e06250625266f50fe6898340abefa"

+

+[[package]]

+name = "ahash"

+version = "0.8.12"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "5a15f179cd60c4584b8a8c596927aadc462e27f2ca70c04e0071964a73ba7a75"

+dependencies = [

+ "cfg-if",

+ "const-random",

+ "getrandom 0.3.3",

+ "once_cell",

+ "version_check",

+ "zerocopy",

+]

+

+[[package]]

+name = "aho-corasick"

+version = "1.1.3"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "8e60d3430d3a69478ad0993f19238d2df97c507009a52b3c10addcd7f6bcb916"

+dependencies = [

+ "memchr",

+]

+

+[[package]]

+name = "alloc-no-stdlib"

+version = "2.0.4"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "cc7bb162ec39d46ab1ca8c77bf72e890535becd1751bb45f64c597edb4c8c6b3"

+

+[[package]]

+name = "alloc-stdlib"

+version = "0.2.2"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "94fb8275041c72129eb51b7d0322c29b8387a0386127718b096429201a5d6ece"

+dependencies = [

+ "alloc-no-stdlib",

+]

+

+[[package]]

+name = "android-tzdata"

+version = "0.1.1"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "e999941b234f3131b00bc13c22d06e8c5ff726d1b6318ac7eb276997bbb4fef0"

+

+[[package]]

+name = "android_system_properties"

+version = "0.1.5"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "819e7219dbd41043ac279b19830f2efc897156490d7fd6ea916720117ee66311"

+dependencies = [

+ "libc",

+]

+

+[[package]]

+name = "arrow"

+version = "53.4.1"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "d3a3ec4fe573f9d1f59d99c085197ef669b00b088ba1d7bb75224732d9357a74"

+dependencies = [

+ "arrow-arith",

+ "arrow-array",

+ "arrow-buffer",

+ "arrow-cast",

+ "arrow-csv",

+ "arrow-data",

+ "arrow-ipc",

+ "arrow-json",

+ "arrow-ord",

+ "arrow-row",

+ "arrow-schema",

+ "arrow-select",

+ "arrow-string",

+]

+

+[[package]]

+name = "arrow-arith"

+version = "53.4.1"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "6dcf19f07792d8c7f91086c67b574a79301e367029b17fcf63fb854332246a10"

+dependencies = [

+ "arrow-array",

+ "arrow-buffer",

+ "arrow-data",

+ "arrow-schema",

+ "chrono",

+ "half",

+ "num",

+]

+

+[[package]]

+name = "arrow-array"

+version = "53.4.1"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "7845c32b41f7053e37a075b3c2f29c6f5ea1b3ca6e5df7a2d325ee6e1b4a63cf"

+dependencies = [

+ "ahash",

+ "arrow-buffer",

+ "arrow-data",

+ "arrow-schema",

+ "chrono",

+ "half",

+ "hashbrown 0.15.5",

+ "num",

+]

+

+[[package]]

+name = "arrow-buffer"

+version = "53.4.1"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "5b5c681a99606f3316f2a99d9c8b6fa3aad0b1d34d8f6d7a1b471893940219d8"

+dependencies = [

+ "bytes",

+ "half",

+ "num",

+]

+

+[[package]]

+name = "arrow-cast"

+version = "53.4.1"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "6365f8527d4f87b133eeb862f9b8093c009d41a210b8f101f91aa2392f61daac"

+dependencies = [

+ "arrow-array",

+ "arrow-buffer",

+ "arrow-data",

+ "arrow-schema",

+ "arrow-select",

+ "atoi",

+ "base64",

+ "chrono",

+ "half",

+ "lexical-core",

+ "num",

+ "ryu",

+]

+

+[[package]]

+name = "arrow-csv"

+version = "53.4.1"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "30dac4d23ac769300349197b845e0fd18c7f9f15d260d4659ae6b5a9ca06f586"

+dependencies = [

+ "arrow-array",

+ "arrow-buffer",

+ "arrow-cast",

+ "arrow-data",

+ "arrow-schema",

+ "chrono",

+ "csv",

+ "csv-core",

+ "lazy_static",

+ "lexical-core",

+ "regex",

+]

+

+[[package]]

+name = "arrow-data"

+version = "53.4.1"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "cd962fc3bf7f60705b25bcaa8eb3318b2545aa1d528656525ebdd6a17a6cd6fb"

+dependencies = [

+ "arrow-buffer",

+ "arrow-schema",

+ "half",

+ "num",

+]

+

+[[package]]

+name = "arrow-ipc"

+version = "53.4.1"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "c3527365b24372f9c948f16e53738eb098720eea2093ae73c7af04ac5e30a39b"

+dependencies = [

+ "arrow-array",

+ "arrow-buffer",

+ "arrow-cast",

+ "arrow-data",

+ "arrow-schema",

+ "flatbuffers",

+]

+

+[[package]]

+name = "arrow-json"

+version = "53.4.1"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "acdec0024749fc0d95e025c0b0266d78613727b3b3a5d4cf8ea47eb6d38afdd1"

+dependencies = [

+ "arrow-array",

+ "arrow-buffer",

+ "arrow-cast",

+ "arrow-data",

+ "arrow-schema",

+ "chrono",

+ "half",

+ "indexmap",

+ "lexical-core",

+ "num",

+ "serde",

+ "serde_json",

+]

+

+[[package]]

+name = "arrow-ord"

+version = "53.4.1"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "79af2db0e62a508d34ddf4f76bfd6109b6ecc845257c9cba6f939653668f89ac"

+dependencies = [

+ "arrow-array",

+ "arrow-buffer",

+ "arrow-data",

+ "arrow-schema",

+ "arrow-select",

+ "half",

+ "num",

+]

+

+[[package]]

+name = "arrow-row"

+version = "53.4.1"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "da30e9d10e9c52f09ea0cf15086d6d785c11ae8dcc3ea5f16d402221b6ac7735"

+dependencies = [

+ "ahash",

+ "arrow-array",

+ "arrow-buffer",

+ "arrow-data",

+ "arrow-schema",

+ "half",

+]

+

+[[package]]

+name = "arrow-schema"

+version = "53.4.1"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "35b0f9c0c3582dd55db0f136d3b44bfa0189df07adcf7dc7f2f2e74db0f52eb8"

+dependencies = [

+ "bitflags 2.9.4",

+]

+

+[[package]]

+name = "arrow-select"

+version = "53.4.1"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "92fc337f01635218493c23da81a364daf38c694b05fc20569c3193c11c561984"

+dependencies = [

+ "ahash",

+ "arrow-array",

+ "arrow-buffer",

+ "arrow-data",

+ "arrow-schema",

+ "num",

+]

+

+[[package]]

+name = "arrow-string"

+version = "53.4.1"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "d596a9fc25dae556672d5069b090331aca8acb93cae426d8b7dcdf1c558fa0ce"

+dependencies = [

+ "arrow-array",

+ "arrow-buffer",

+ "arrow-data",

+ "arrow-schema",

+ "arrow-select",

+ "memchr",

+ "num",

+ "regex",

+ "regex-syntax",

+]

+

+[[package]]

+name = "atoi"

+version = "2.0.0"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "f28d99ec8bfea296261ca1af174f24225171fea9664ba9003cbebee704810528"

+dependencies = [

+ "num-traits",

+]

+

+[[package]]

+name = "autocfg"

+version = "1.5.0"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "c08606f8c3cbf4ce6ec8e28fb0014a2c086708fe954eaa885384a6165172e7e8"

+

+[[package]]

+name = "base64"

+version = "0.22.1"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "72b3254f16251a8381aa12e40e3c4d2f0199f8c6508fbecb9d91f575e0fbb8c6"

+

+[[package]]

+name = "bitflags"

+version = "1.3.2"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "bef38d45163c2f1dde094a7dfd33ccf595c92905c8f8f4fdc18d06fb1037718a"

+

+[[package]]

+name = "bitflags"

+version = "2.9.4"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "2261d10cca569e4643e526d8dc2e62e433cc8aba21ab764233731f8d369bf394"

+

+[[package]]

+name = "brotli"

+version = "7.0.0"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "cc97b8f16f944bba54f0433f07e30be199b6dc2bd25937444bbad560bcea29bd"

+dependencies = [

+ "alloc-no-stdlib",

+ "alloc-stdlib",

+ "brotli-decompressor",

+]

+

+[[package]]

+name = "brotli-decompressor"

+version = "4.0.3"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "a334ef7c9e23abf0ce748e8cd309037da93e606ad52eb372e4ce327a0dcfbdfd"

+dependencies = [

+ "alloc-no-stdlib",

+ "alloc-stdlib",

+]

+

+[[package]]

+name = "bumpalo"

+version = "3.19.0"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "46c5e41b57b8bba42a04676d81cb89e9ee8e859a1a66f80a5a72e1cb76b34d43"

+

+[[package]]

+name = "byteorder"

+version = "1.5.0"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "1fd0f2584146f6f2ef48085050886acf353beff7305ebd1ae69500e27c67f64b"

+

+[[package]]

+name = "bytes"

+version = "1.10.1"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "d71b6127be86fdcfddb610f7182ac57211d4b18a3e9c82eb2d17662f2227ad6a"

+

+[[package]]

+name = "cc"

+version = "1.2.38"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "80f41ae168f955c12fb8960b057d70d0ca153fb83182b57d86380443527be7e9"

+dependencies = [

+ "find-msvc-tools",

+ "jobserver",

+ "libc",

+ "shlex",

+]

+

+[[package]]

+name = "cesu8"

+version = "1.1.0"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "6d43a04d8753f35258c91f8ec639f792891f748a1edbd759cf1dcea3382ad83c"

+

+[[package]]

+name = "cfg-if"

+version = "1.0.3"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "2fd1289c04a9ea8cb22300a459a72a385d7c73d3259e2ed7dcb2af674838cfa9"

+

+[[package]]

+name = "chrono"

+version = "0.4.39"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "7e36cc9d416881d2e24f9a963be5fb1cd90966419ac844274161d10488b3e825"

+dependencies = [

+ "android-tzdata",

+ "iana-time-zone",

+ "js-sys",

+ "num-traits",

+ "wasm-bindgen",

+ "windows-targets 0.52.6",

+]

+

+[[package]]

+name = "combine"

+version = "4.6.7"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "ba5a308b75df32fe02788e748662718f03fde005016435c444eea572398219fd"

+dependencies = [

+ "bytes",

+ "memchr",

+]

+

+[[package]]

+name = "const-random"

+version = "0.1.18"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "87e00182fe74b066627d63b85fd550ac2998d4b0bd86bfed477a0ae4c7c71359"

+dependencies = [

+ "const-random-macro",

+]

+

+[[package]]

+name = "const-random-macro"

+version = "0.1.16"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "f9d839f2a20b0aee515dc581a6172f2321f96cab76c1a38a4c584a194955390e"

+dependencies = [

+ "getrandom 0.2.16",

+ "once_cell",

+ "tiny-keccak",

+]

+

+[[package]]

+name = "core-foundation-sys"

+version = "0.8.7"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "773648b94d0e5d620f64f280777445740e61fe701025087ec8b57f45c791888b"

+

+[[package]]

+name = "crc32fast"

+version = "1.5.0"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "9481c1c90cbf2ac953f07c8d4a58aa3945c425b7185c9154d67a65e4230da511"

+dependencies = [

+ "cfg-if",

+]

+

+[[package]]

+name = "crossbeam-utils"

+version = "0.8.21"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "d0a5c400df2834b80a4c3327b3aad3a4c4cd4de0629063962b03235697506a28"

+

+[[package]]

+name = "crunchy"

+version = "0.2.4"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "460fbee9c2c2f33933d720630a6a0bac33ba7053db5344fac858d4b8952d77d5"

+

+[[package]]

+name = "csv"

+version = "1.3.1"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "acdc4883a9c96732e4733212c01447ebd805833b7275a73ca3ee080fd77afdaf"

+dependencies = [

+ "csv-core",

+ "itoa",

+ "ryu",

+ "serde",

+]

+

+[[package]]

+name = "csv-core"

+version = "0.1.12"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "7d02f3b0da4c6504f86e9cd789d8dbafab48c2321be74e9987593de5a894d93d"

+dependencies = [

+ "memchr",

+]

+

+[[package]]

+name = "dashmap"

+version = "7.0.0-rc2"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "e4a1e35a65fe0538a60167f0ada6e195ad5d477f6ddae273943596d4a1a5730b"

+dependencies = [

+ "cfg-if",

+ "crossbeam-utils",

+ "equivalent",

+ "hashbrown 0.15.5",

+ "lock_api",

+ "parking_lot_core",

+]

+

+[[package]]

+name = "equivalent"

+version = "1.0.2"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "877a4ace8713b0bcf2a4e7eec82529c029f1d0619886d18145fea96c3ffe5c0f"

+

+[[package]]

+name = "find-msvc-tools"

+version = "0.1.2"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "1ced73b1dacfc750a6db6c0a0c3a3853c8b41997e2e2c563dc90804ae6867959"

+

+[[package]]

+name = "flatbuffers"

+version = "24.12.23"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "4f1baf0dbf96932ec9a3038d57900329c015b0bfb7b63d904f3bc27e2b02a096"

+dependencies = [

+ "bitflags 1.3.2",

+ "rustc_version",

+]

+

+[[package]]

+name = "flate2"

+version = "1.1.2"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "4a3d7db9596fecd151c5f638c0ee5d5bd487b6e0ea232e5dc96d5250f6f94b1d"

+dependencies = [

+ "crc32fast",

+ "miniz_oxide",

+]

+

+[[package]]

+name = "getrandom"

+version = "0.2.16"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "335ff9f135e4384c8150d6f27c6daed433577f86b4750418338c01a1a2528592"

+dependencies = [

+ "cfg-if",

+ "libc",

+ "wasi 0.11.1+wasi-snapshot-preview1",

+]

+

+[[package]]

+name = "getrandom"

+version = "0.3.3"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "26145e563e54f2cadc477553f1ec5ee650b00862f0a58bcd12cbdc5f0ea2d2f4"

+dependencies = [

+ "cfg-if",

+ "libc",

+ "r-efi",

+ "wasi 0.14.7+wasi-0.2.4",

+]

+

+[[package]]

+name = "half"

+version = "2.6.0"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "459196ed295495a68f7d7fe1d84f6c4b7ff0e21fe3017b2f283c6fac3ad803c9"

+dependencies = [

+ "cfg-if",

+ "crunchy",

+ "num-traits",

+]

+

+[[package]]

+name = "hashbrown"

+version = "0.15.5"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "9229cfe53dfd69f0609a49f65461bd93001ea1ef889cd5529dd176593f5338a1"

+

+[[package]]

+name = "hashbrown"

+version = "0.16.0"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "5419bdc4f6a9207fbeba6d11b604d481addf78ecd10c11ad51e76c2f6482748d"

+

+[[package]]

+name = "iana-time-zone"

+version = "0.1.64"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "33e57f83510bb73707521ebaffa789ec8caf86f9657cad665b092b581d40e9fb"

+dependencies = [

+ "android_system_properties",

+ "core-foundation-sys",

+ "iana-time-zone-haiku",

+ "js-sys",

+ "log",

+ "wasm-bindgen",

+ "windows-core",

+]

+

+[[package]]

+name = "iana-time-zone-haiku"

+version = "0.1.2"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "f31827a206f56af32e590ba56d5d2d085f558508192593743f16b2306495269f"

+dependencies = [

+ "cc",

+]

+

+[[package]]

+name = "indexmap"

+version = "2.11.4"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "4b0f83760fb341a774ed326568e19f5a863af4a952def8c39f9ab92fd95b88e5"

+dependencies = [

+ "equivalent",

+ "hashbrown 0.16.0",

+]

+

+[[package]]

+name = "integer-encoding"

+version = "3.0.4"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "8bb03732005da905c88227371639bf1ad885cc712789c011c31c5fb3ab3ccf02"

+

+[[package]]

+name = "itoa"

+version = "1.0.15"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "4a5f13b858c8d314ee3e8f639011f7ccefe71f97f96e50151fb991f267928e2c"

+

+[[package]]

+name = "jni"

+version = "0.21.1"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "1a87aa2bb7d2af34197c04845522473242e1aa17c12f4935d5856491a7fb8c97"

+dependencies = [

+ "cesu8",

+ "cfg-if",

+ "combine",

+ "jni-sys",

+ "log",

+ "thiserror",

+ "walkdir",

+ "windows-sys 0.45.0",

+]

+

+[[package]]

+name = "jni-sys"

+version = "0.3.0"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "8eaf4bc02d17cbdd7ff4c7438cafcdf7fb9a4613313ad11b4f8fefe7d3fa0130"

+

+[[package]]

+name = "jobserver"

+version = "0.1.34"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "9afb3de4395d6b3e67a780b6de64b51c978ecf11cb9a462c66be7d4ca9039d33"

+dependencies = [

+ "getrandom 0.3.3",

+ "libc",

+]

+

+[[package]]

+name = "js-sys"

+version = "0.3.81"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "ec48937a97411dcb524a265206ccd4c90bb711fca92b2792c407f268825b9305"

+dependencies = [

+ "once_cell",

+ "wasm-bindgen",

+]

+

+[[package]]

+name = "lazy_static"

+version = "1.5.0"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "bbd2bcb4c963f2ddae06a2efc7e9f3591312473c50c6685e1f298068316e66fe"

+

+[[package]]

+name = "lexical-core"

+version = "1.0.6"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "7d8d125a277f807e55a77304455eb7b1cb52f2b18c143b60e766c120bd64a594"

+dependencies = [

+ "lexical-parse-float",

+ "lexical-parse-integer",

+ "lexical-util",

+ "lexical-write-float",

+ "lexical-write-integer",

+]

+

+[[package]]

+name = "lexical-parse-float"

+version = "1.0.6"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "52a9f232fbd6f550bc0137dcb5f99ab674071ac2d690ac69704593cb4abbea56"

+dependencies = [

+ "lexical-parse-integer",

+ "lexical-util",

+]

+

+[[package]]

+name = "lexical-parse-integer"

+version = "1.0.6"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "9a7a039f8fb9c19c996cd7b2fcce303c1b2874fe1aca544edc85c4a5f8489b34"

+dependencies = [

+ "lexical-util",

+]

+

+[[package]]

+name = "lexical-util"

+version = "1.0.7"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "2604dd126bb14f13fb5d1bd6a66155079cb9fa655b37f875b3a742c705dbed17"

+

+[[package]]

+name = "lexical-write-float"

+version = "1.0.6"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "50c438c87c013188d415fbabbb1dceb44249ab81664efbd31b14ae55dabb6361"

+dependencies = [

+ "lexical-util",

+ "lexical-write-integer",

+]

+

+[[package]]

+name = "lexical-write-integer"

+version = "1.0.6"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "409851a618475d2d5796377cad353802345cba92c867d9fbcde9cf4eac4e14df"

+dependencies = [

+ "lexical-util",

+]

+

+[[package]]

+name = "libc"

+version = "0.2.176"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "58f929b4d672ea937a23a1ab494143d968337a5f47e56d0815df1e0890ddf174"

+

+[[package]]

+name = "libm"

+version = "0.2.15"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "f9fbbcab51052fe104eb5e5d351cf728d30a5be1fe14d9be8a3b097481fb97de"

+

+[[package]]

+name = "lock_api"

+version = "0.4.13"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "96936507f153605bddfcda068dd804796c84324ed2510809e5b2a624c81da765"

+dependencies = [

+ "autocfg",

+ "scopeguard",

+]

+

+[[package]]

+name = "log"

+version = "0.4.28"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "34080505efa8e45a4b816c349525ebe327ceaa8559756f0356cba97ef3bf7432"

+

+[[package]]

+name = "lz4_flex"

+version = "0.11.5"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "08ab2867e3eeeca90e844d1940eab391c9dc5228783db2ed999acbc0a9ed375a"

+dependencies = [

+ "twox-hash 2.1.2",

+]

+

+[[package]]

+name = "memchr"

+version = "2.7.6"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "f52b00d39961fc5b2736ea853c9cc86238e165017a493d1d5c8eac6bdc4cc273"

+

+[[package]]

+name = "miniz_oxide"

+version = "0.8.9"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "1fa76a2c86f704bdb222d66965fb3d63269ce38518b83cb0575fca855ebb6316"

+dependencies = [

+ "adler2",

+]

+

+[[package]]

+name = "num"

+version = "0.4.3"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "35bd024e8b2ff75562e5f34e7f4905839deb4b22955ef5e73d2fea1b9813cb23"

+dependencies = [

+ "num-bigint",

+ "num-complex",

+ "num-integer",

+ "num-iter",

+ "num-rational",

+ "num-traits",

+]

+

+[[package]]

+name = "num-bigint"

+version = "0.4.6"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "a5e44f723f1133c9deac646763579fdb3ac745e418f2a7af9cd0c431da1f20b9"

+dependencies = [

+ "num-integer",

+ "num-traits",

+]

+

+[[package]]

+name = "num-complex"

+version = "0.4.6"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "73f88a1307638156682bada9d7604135552957b7818057dcef22705b4d509495"

+dependencies = [

+ "num-traits",

+]

+

+[[package]]

+name = "num-integer"

+version = "0.1.46"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "7969661fd2958a5cb096e56c8e1ad0444ac2bbcd0061bd28660485a44879858f"

+dependencies = [

+ "num-traits",

+]

+

+[[package]]

+name = "num-iter"

+version = "0.1.45"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "1429034a0490724d0075ebb2bc9e875d6503c3cf69e235a8941aa757d83ef5bf"

+dependencies = [

+ "autocfg",

+ "num-integer",

+ "num-traits",

+]

+

+[[package]]

+name = "num-rational"

+version = "0.4.2"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "f83d14da390562dca69fc84082e73e548e1ad308d24accdedd2720017cb37824"

+dependencies = [

+ "num-bigint",

+ "num-integer",

+ "num-traits",

+]

+

+[[package]]

+name = "num-traits"

+version = "0.2.19"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "071dfc062690e90b734c0b2273ce72ad0ffa95f0c74596bc250dcfd960262841"

+dependencies = [

+ "autocfg",

+ "libm",

+]

+

+[[package]]

+name = "once_cell"

+version = "1.21.3"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "42f5e15c9953c5e4ccceeb2e7382a716482c34515315f7b03532b8b4e8393d2d"

+

+[[package]]

+name = "ordered-float"

+version = "2.10.1"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "68f19d67e5a2795c94e73e0bb1cc1a7edeb2e28efd39e2e1c9b7a40c1108b11c"

+dependencies = [

+ "num-traits",

+]

+

+[[package]]

+name = "parking_lot_core"

+version = "0.9.11"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "bc838d2a56b5b1a6c25f55575dfc605fabb63bb2365f6c2353ef9159aa69e4a5"

+dependencies = [

+ "cfg-if",

+ "libc",

+ "redox_syscall",

+ "smallvec",

+ "windows-targets 0.52.6",

+]

+

+[[package]]

+name = "parquet"

+version = "53.4.1"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "2f8cf58b29782a7add991f655ff42929e31a7859f5319e53db9e39a714cb113c"

+dependencies = [

+ "ahash",

+ "arrow-array",

+ "arrow-buffer",

+ "arrow-cast",

+ "arrow-data",

+ "arrow-ipc",

+ "arrow-schema",

+ "arrow-select",

+ "base64",

+ "brotli",

+ "bytes",

+ "chrono",

+ "flate2",

+ "half",

+ "hashbrown 0.15.5",

+ "lz4_flex",

+ "num",

+ "num-bigint",

+ "paste",

+ "seq-macro",

+ "snap",

+ "thrift",

+ "twox-hash 1.6.3",

+ "zstd",

+ "zstd-sys",

+]

+

+[[package]]

+name = "paste"

+version = "1.0.15"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "57c0d7b74b563b49d38dae00a0c37d4d6de9b432382b2892f0574ddcae73fd0a"

+

+[[package]]

+name = "pkg-config"

+version = "0.3.32"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "7edddbd0b52d732b21ad9a5fab5c704c14cd949e5e9a1ec5929a24fded1b904c"

+

+[[package]]

+name = "proc-macro2"

+version = "1.0.101"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "89ae43fd86e4158d6db51ad8e2b80f313af9cc74f5c0e03ccb87de09998732de"

+dependencies = [

+ "unicode-ident",

+]

+

+[[package]]

+name = "quote"

+version = "1.0.40"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "1885c039570dc00dcb4ff087a89e185fd56bae234ddc7f056a945bf36467248d"

+dependencies = [

+ "proc-macro2",

+]

+

+[[package]]

+name = "r-efi"

+version = "5.3.0"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "69cdb34c158ceb288df11e18b4bd39de994f6657d83847bdffdbd7f346754b0f"

+

+[[package]]

+name = "redox_syscall"

+version = "0.5.17"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "5407465600fb0548f1442edf71dd20683c6ed326200ace4b1ef0763521bb3b77"

+dependencies = [

+ "bitflags 2.9.4",

+]

+

+[[package]]

+name = "regex"

+version = "1.11.3"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "8b5288124840bee7b386bc413c487869b360b2b4ec421ea56425128692f2a82c"

+dependencies = [

+ "aho-corasick",

+ "memchr",

+ "regex-automata",

+ "regex-syntax",

+]

+

+[[package]]

+name = "regex-automata"

+version = "0.4.11"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "833eb9ce86d40ef33cb1306d8accf7bc8ec2bfea4355cbdebb3df68b40925cad"

+dependencies = [

+ "aho-corasick",

+ "memchr",

+ "regex-syntax",

+]

+

+[[package]]

+name = "regex-syntax"

+version = "0.8.6"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "caf4aa5b0f434c91fe5c7f1ecb6a5ece2130b02ad2a590589dda5146df959001"

+

+[[package]]

+name = "rust"

+version = "0.1.0"

+dependencies = [

+ "arrow",

+ "chrono",

+ "dashmap",

+ "jni",

+ "lazy_static",

+ "parquet",

+]

+

+[[package]]

+name = "rustc_version"

+version = "0.4.1"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "cfcb3a22ef46e85b45de6ee7e79d063319ebb6594faafcf1c225ea92ab6e9b92"

+dependencies = [

+ "semver",

+]

+

+[[package]]

+name = "rustversion"

+version = "1.0.22"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "b39cdef0fa800fc44525c84ccb54a029961a8215f9619753635a9c0d2538d46d"

+

+[[package]]

+name = "ryu"

+version = "1.0.20"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "28d3b2b1366ec20994f1fd18c3c594f05c5dd4bc44d8bb0c1c632c8d6829481f"

+

+[[package]]

+name = "same-file"

+version = "1.0.6"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "93fc1dc3aaa9bfed95e02e6eadabb4baf7e3078b0bd1b4d7b6b0b68378900502"

+dependencies = [

+ "winapi-util",

+]

+

+[[package]]

+name = "scopeguard"

+version = "1.2.0"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "94143f37725109f92c262ed2cf5e59bce7498c01bcc1502d7b9afe439a4e9f49"

+

+[[package]]

+name = "semver"

+version = "1.0.27"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "d767eb0aabc880b29956c35734170f26ed551a859dbd361d140cdbeca61ab1e2"

+

+[[package]]

+name = "seq-macro"

+version = "0.3.6"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "1bc711410fbe7399f390ca1c3b60ad0f53f80e95c5eb935e52268a0e2cd49acc"

+

+[[package]]

+name = "serde"

+version = "1.0.226"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "0dca6411025b24b60bfa7ec1fe1f8e710ac09782dca409ee8237ba74b51295fd"

+dependencies = [

+ "serde_core",

+]

+

+[[package]]

+name = "serde_core"

+version = "1.0.226"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "ba2ba63999edb9dac981fb34b3e5c0d111a69b0924e253ed29d83f7c99e966a4"

+dependencies = [

+ "serde_derive",

+]

+

+[[package]]

+name = "serde_derive"

+version = "1.0.226"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "8db53ae22f34573731bafa1db20f04027b2d25e02d8205921b569171699cdb33"

+dependencies = [

+ "proc-macro2",

+ "quote",

+ "syn",

+]

+

+[[package]]

+name = "serde_json"

+version = "1.0.145"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "402a6f66d8c709116cf22f558eab210f5a50187f702eb4d7e5ef38d9a7f1c79c"

+dependencies = [

+ "itoa",

+ "memchr",

+ "ryu",

+ "serde",

+ "serde_core",

+]

+

+[[package]]

+name = "shlex"

+version = "1.3.0"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "0fda2ff0d084019ba4d7c6f371c95d8fd75ce3524c3cb8fb653a3023f6323e64"

+

+[[package]]

+name = "smallvec"

+version = "1.15.1"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "67b1b7a3b5fe4f1376887184045fcf45c69e92af734b7aaddc05fb777b6fbd03"

+

+[[package]]

+name = "snap"

+version = "1.1.1"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "1b6b67fb9a61334225b5b790716f609cd58395f895b3fe8b328786812a40bc3b"

+

+[[package]]

+name = "static_assertions"

+version = "1.1.0"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "a2eb9349b6444b326872e140eb1cf5e7c522154d69e7a0ffb0fb81c06b37543f"

+

+[[package]]

+name = "syn"

+version = "2.0.106"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "ede7c438028d4436d71104916910f5bb611972c5cfd7f89b8300a8186e6fada6"

+dependencies = [

+ "proc-macro2",

+ "quote",

+ "unicode-ident",

+]

+

+[[package]]

+name = "thiserror"

+version = "1.0.69"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "b6aaf5339b578ea85b50e080feb250a3e8ae8cfcdff9a461c9ec2904bc923f52"

+dependencies = [

+ "thiserror-impl",

+]

+

+[[package]]

+name = "thiserror-impl"

+version = "1.0.69"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "4fee6c4efc90059e10f81e6d42c60a18f76588c3d74cb83a0b242a2b6c7504c1"

+dependencies = [

+ "proc-macro2",

+ "quote",

+ "syn",

+]

+

+[[package]]

+name = "thrift"

+version = "0.17.0"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "7e54bc85fc7faa8bc175c4bab5b92ba8d9a3ce893d0e9f42cc455c8ab16a9e09"

+dependencies = [

+ "byteorder",

+ "integer-encoding",

+ "ordered-float",

+]

+

+[[package]]

+name = "tiny-keccak"

+version = "2.0.2"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "2c9d3793400a45f954c52e73d068316d76b6f4e36977e3fcebb13a2721e80237"

+dependencies = [

+ "crunchy",

+]

+

+[[package]]

+name = "twox-hash"

+version = "1.6.3"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "97fee6b57c6a41524a810daee9286c02d7752c4253064d0b05472833a438f675"

+dependencies = [

+ "cfg-if",

+ "static_assertions",

+]

+

+[[package]]

+name = "twox-hash"

+version = "2.1.2"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "9ea3136b675547379c4bd395ca6b938e5ad3c3d20fad76e7fe85f9e0d011419c"

+

+[[package]]

+name = "unicode-ident"

+version = "1.0.19"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "f63a545481291138910575129486daeaf8ac54aee4387fe7906919f7830c7d9d"

+

+[[package]]

+name = "version_check"

+version = "0.9.5"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "0b928f33d975fc6ad9f86c8f283853ad26bdd5b10b7f1542aa2fa15e2289105a"

+

+[[package]]

+name = "walkdir"

+version = "2.5.0"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "29790946404f91d9c5d06f9874efddea1dc06c5efe94541a7d6863108e3a5e4b"

+dependencies = [

+ "same-file",

+ "winapi-util",

+]

+

+[[package]]

+name = "wasi"

+version = "0.11.1+wasi-snapshot-preview1"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "ccf3ec651a847eb01de73ccad15eb7d99f80485de043efb2f370cd654f4ea44b"

+

+[[package]]

+name = "wasi"

+version = "0.14.7+wasi-0.2.4"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "883478de20367e224c0090af9cf5f9fa85bed63a95c1abf3afc5c083ebc06e8c"

+dependencies = [

+ "wasip2",

+]

+

+[[package]]

+name = "wasip2"

+version = "1.0.1+wasi-0.2.4"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "0562428422c63773dad2c345a1882263bbf4d65cf3f42e90921f787ef5ad58e7"

+dependencies = [

+ "wit-bindgen",

+]

+

+[[package]]

+name = "wasm-bindgen"

+version = "0.2.104"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "c1da10c01ae9f1ae40cbfac0bac3b1e724b320abfcf52229f80b547c0d250e2d"

+dependencies = [

+ "cfg-if",

+ "once_cell",

+ "rustversion",

+ "wasm-bindgen-macro",

+ "wasm-bindgen-shared",

+]

+

+[[package]]

+name = "wasm-bindgen-backend"

+version = "0.2.104"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "671c9a5a66f49d8a47345ab942e2cb93c7d1d0339065d4f8139c486121b43b19"

+dependencies = [

+ "bumpalo",

+ "log",

+ "proc-macro2",

+ "quote",

+ "syn",

+ "wasm-bindgen-shared",

+]

+

+[[package]]

+name = "wasm-bindgen-macro"

+version = "0.2.104"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "7ca60477e4c59f5f2986c50191cd972e3a50d8a95603bc9434501cf156a9a119"

+dependencies = [

+ "quote",

+ "wasm-bindgen-macro-support",

+]

+

+[[package]]

+name = "wasm-bindgen-macro-support"

+version = "0.2.104"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "9f07d2f20d4da7b26400c9f4a0511e6e0345b040694e8a75bd41d578fa4421d7"

+dependencies = [

+ "proc-macro2",

+ "quote",

+ "syn",

+ "wasm-bindgen-backend",

+ "wasm-bindgen-shared",

+]

+

+[[package]]

+name = "wasm-bindgen-shared"

+version = "0.2.104"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "bad67dc8b2a1a6e5448428adec4c3e84c43e561d8c9ee8a9e5aabeb193ec41d1"

+dependencies = [

+ "unicode-ident",

+]

+

+[[package]]

+name = "winapi-util"

+version = "0.1.11"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "c2a7b1c03c876122aa43f3020e6c3c3ee5c05081c9a00739faf7503aeba10d22"

+dependencies = [

+ "windows-sys 0.61.0",

+]

+

+[[package]]

+name = "windows-core"

+version = "0.62.0"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "57fe7168f7de578d2d8a05b07fd61870d2e73b4020e9f49aa00da8471723497c"

+dependencies = [

+ "windows-implement",

+ "windows-interface",

+ "windows-link",

+ "windows-result",

+ "windows-strings",

+]

+

+[[package]]

+name = "windows-implement"

+version = "0.60.0"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "a47fddd13af08290e67f4acabf4b459f647552718f683a7b415d290ac744a836"

+dependencies = [

+ "proc-macro2",

+ "quote",

+ "syn",

+]

+

+[[package]]

+name = "windows-interface"

+version = "0.59.1"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "bd9211b69f8dcdfa817bfd14bf1c97c9188afa36f4750130fcdf3f400eca9fa8"

+dependencies = [

+ "proc-macro2",

+ "quote",

+ "syn",

+]

+

+[[package]]

+name = "windows-link"

+version = "0.2.0"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "45e46c0661abb7180e7b9c281db115305d49ca1709ab8242adf09666d2173c65"

+

+[[package]]

+name = "windows-result"

+version = "0.4.0"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "7084dcc306f89883455a206237404d3eaf961e5bd7e0f312f7c91f57eb44167f"

+dependencies = [

+ "windows-link",

+]

+

+[[package]]

+name = "windows-strings"

+version = "0.5.0"

+source = "registry+https://github.com/rust-lang/crates.io-index"

+checksum = "7218c655a553b0bed4426cf54b20d7ba363ef543b52d515b3e48d7fd55318dda"

+dependencies = [