diff --git a/.github/ISSUE_TEMPLATE/bug_report.md b/.github/ISSUE_TEMPLATE/bug_report.md

new file mode 100644

index 00000000..58bfecda

--- /dev/null

+++ b/.github/ISSUE_TEMPLATE/bug_report.md

@@ -0,0 +1,32 @@

+---

+name: Bug report

+about: Create a report to help us improve

+title: ''

+labels: ''

+assignees: ''

+

+---

+

+**Describe the bug**

+A clear and concise description of what the bug is.

+

+**To Reproduce**

+Steps to reproduce the behavior:

+1. Go to our [off-the-shelf samples](https://github.com/AzureAD/microsoft-authentication-library-for-python/tree/dev/sample) and pick one that is closest to your usage scenario. You should not need to modify the sample.

+2. Follow the instructions of the sample, typically at the beginning of it, to prepare a `config.json` containing your test configuration.

+3. Run that sample, typically with `python sample.py config.json`.

+4. See the error

+5. In this bug report, tell us which sample you chose and paste the content of the config.json with your test setup (you can omit your credentials, and/or email the file to our developers).

+

+**Expected behavior**

+A clear and concise description of what you expected to happen.

+

+**What you see instead**

+Paste the sample output, or add screenshots to help explain your problem.

+

+**The MSAL Python version you are using**

+Paste the output of this

+`python -c "import msal; print(msal.__version__)"`

+

+**Additional context**

+Add any other context about the problem here.
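Step 5 of the bug report template above invites reporters to paste their `config.json` while leaving out credentials. A minimal Python sketch of doing that mechanically; the `redact` and `redacted_config_text` helpers are hypothetical, and the set of sensitive key names is an assumption (the config of the sample you ran may use other names, so check it):

```python
import json

# Assumed names of keys that may hold secrets; adjust to match your sample's config.json.
SENSITIVE_KEYS = {"client_secret", "secret", "password", "client_credential"}


def redact(value):
    """Return a copy of a parsed JSON value with sensitive values masked."""
    if isinstance(value, dict):
        return {
            key: "***REDACTED***" if key.lower() in SENSITIVE_KEYS else redact(item)
            for key, item in value.items()
        }
    if isinstance(value, list):
        return [redact(item) for item in value]
    return value  # Strings, numbers, booleans and None are kept as-is.


def redacted_config_text(path):
    """Load a config.json file and return pretty-printed, redacted JSON text."""
    with open(path, encoding="utf-8") as f:
        return json.dumps(redact(json.load(f)), indent=2)
```

You might then paste the output of `print(redacted_config_text("config.json"))` into the report. Masking by key name is deliberately simple; it will not catch a secret stored under an unexpected key, so still review the text before posting it.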

diff --git a/.github/workflows/python-package.yml b/.github/workflows/python-package.yml

new file mode 100644

index 00000000..461eb959

--- /dev/null

+++ b/.github/workflows/python-package.yml

@@ -0,0 +1,123 @@

+# This workflow will install Python dependencies, run tests and lint with a variety of Python versions

+# For more information see: https://help.github.com/actions/language-and-framework-guides/using-python-with-github-actions

+

+name: CI/CD

+

+on:
+  push:
+  pull_request:
+    branches: [ dev ]
+
+    # This guards against unknown PRs until a community member vets and labels them.
+    types: [ labeled ]

+

+jobs:
+  ci:
+    env:
+      # Fake a TRAVIS env so that the pre-existing test cases would behave like before
+      TRAVIS: true
+      LAB_APP_CLIENT_ID: ${{ secrets.LAB_APP_CLIENT_ID }}
+      LAB_APP_CLIENT_SECRET: ${{ secrets.LAB_APP_CLIENT_SECRET }}
+      LAB_OBO_CLIENT_SECRET: ${{ secrets.LAB_OBO_CLIENT_SECRET }}
+      LAB_OBO_CONFIDENTIAL_CLIENT_ID: ${{ secrets.LAB_OBO_CONFIDENTIAL_CLIENT_ID }}
+      LAB_OBO_PUBLIC_CLIENT_ID: ${{ secrets.LAB_OBO_PUBLIC_CLIENT_ID }}
+
+    # Derived from https://docs.github.com/en/actions/guides/building-and-testing-python#starting-with-the-python-workflow-template
+    runs-on: ubuntu-latest  # It switched to 22.04 shortly after 2022-Nov-8
+    strategy:
+      matrix:
+        python-version: [3.7, 3.8, 3.9, "3.10", "3.11", "3.12"]
+
+    steps:
+    - uses: actions/checkout@v4
+    - name: Set up Python ${{ matrix.python-version }}
+      uses: actions/setup-python@v4
+      # It automatically takes care of pip cache, according to
+      # https://docs.github.com/en/actions/using-workflows/caching-dependencies-to-speed-up-workflows#about-caching-workflow-dependencies
+      with:
+        python-version: ${{ matrix.python-version }}
+
+    - name: Install dependencies
+      run: |
+        python -m pip install --upgrade pip
+        python -m pip install flake8 pytest
+        if [ -f requirements.txt ]; then pip install -r requirements.txt; fi
+    - name: Test with pytest
+      run: pytest --benchmark-skip
+    - name: Lint with flake8
+      run: |
+        # stop the build if there are Python syntax errors or undefined names
+        #flake8 . --count --select=E9,F63,F7,F82 --show-source --statistics
+        # exit-zero treats all errors as warnings. The GitHub editor is 127 chars wide
+        #flake8 . --count --exit-zero --max-complexity=10 --max-line-length=127 --statistics

+

+  cb:
+    # Benchmark only after the correctness has been tested by CI,
+    # and then run benchmark only once (sampling with only one Python version).
+    needs: ci
+    runs-on: ubuntu-latest
+    steps:
+    - uses: actions/checkout@v4
+    - name: Set up Python 3.9
+      uses: actions/setup-python@v4
+      with:
+        python-version: 3.9
+    - name: Install dependencies
+      run: |
+        python -m pip install --upgrade pip
+        if [ -f requirements.txt ]; then pip install -r requirements.txt; fi
+    - name: Setup an updatable cache for Performance Baselines
+      uses: actions/cache@v3
+      with:
+        path: .perf.baseline
+        key: ${{ runner.os }}-performance-${{ hashFiles('tests/test_benchmark.py') }}
+        restore-keys: ${{ runner.os }}-performance-
+    - name: Run benchmark
+      run: pytest --benchmark-only --benchmark-json benchmark.json --log-cli-level INFO tests/test_benchmark.py
+    - name: Render benchmark result
+      uses: benchmark-action/github-action-benchmark@v1
+      with:
+        tool: 'pytest'
+        output-file-path: benchmark.json
+        fail-on-alert: true
+    - name: Publish GitHub Pages
+      run: git push origin gh-pages

+

+  cd:
+    needs: ci
+    # Note: github.event.pull_request.draft == false WON'T WORK in "if" statement,
+    # because the triggered event is a push, not a pull_request.
+    # This means each commit pushed to a release-* branch will trigger a release on TestPyPI.
+    # Those releases will only succeed when each push has a new version number: a1, a2, a3, etc.
+    if: |
+      github.event_name == 'push' &&
+      (
+        startsWith(github.ref, 'refs/tags') ||
+        startsWith(github.ref, 'refs/heads/release-')
+      )
+    runs-on: ubuntu-latest
+    steps:
+    - uses: actions/checkout@v4
+    - name: Set up Python 3.9
+      uses: actions/setup-python@v4
+      with:
+        python-version: 3.9
+    - name: Build a package for release
+      run: |
+        python -m pip install build --user
+        python -m build --sdist --wheel --outdir dist/ .
+    - name: |
+        Publish to TestPyPI when pushing to release-* branch.
+        You had better test with a1, a2, b1, b2 releases first.
+      uses: pypa/gh-action-pypi-publish@v1.4.2
+      if: startsWith(github.ref, 'refs/heads/release-')
+      with:
+        user: __token__
+        password: ${{ secrets.TEST_PYPI_API_TOKEN }}
+        repository_url: https://test.pypi.org/legacy/
+    - name: Publish to PyPI when tagged
+      if: startsWith(github.ref, 'refs/tags')
+      uses: pypa/gh-action-pypi-publish@v1.4.2
+      with:
+        user: __token__
+        password: ${{ secrets.PYPI_API_TOKEN }}
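The `cd` job above publishes to TestPyPI from `release-*` branches and to PyPI from tags, and only for `push` events. A plain-Python sketch of that decision table, handy for reasoning about which ref goes where; `publish_target` is a hypothetical helper, and GitHub evaluates the real `if:` expressions itself. It lower-cases the ref because GitHub's `startsWith` expression function is documented as case-insensitive:

```python
from typing import Optional


def publish_target(event_name: str, ref: str) -> Optional[str]:
    """Mirror the cd job's conditions: return "pypi", "testpypi", or None."""
    # Job-level guard: the cd job only runs for push events.
    if event_name != "push":
        return None
    ref = ref.lower()  # GitHub's startsWith() ignores case.
    if ref.startswith("refs/tags"):
        return "pypi"  # "Publish to PyPI when tagged"
    if ref.startswith("refs/heads/release-"):
        return "testpypi"  # "Publish to TestPyPI when pushing to release-* branch"
    return None  # Any other branch: CI runs, but nothing is published.
```

Because a ref cannot be both a tag and a branch, at most one publish step runs per push.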

diff --git a/.gitignore b/.gitignore

index eb93430d..36b43713 100644

--- a/.gitignore

+++ b/.gitignore

@@ -1,48 +1,62 @@

-.DS_Store

-*.py[co]

+# Python cache

+__pycache__/

+*.pyc

-# Packages

-*.egg

-*.egg-info

-dist

-build

-eggs

-parts

-var

-sdist

-develop-eggs

-.installed.cfg

+# PTVS analysis

+.ptvs/

+*.pyproj

-# Installer logs

-pip-log.txt

+# Build results

+/bin/

+/obj/

+/dist/

+/MANIFEST

-# Unit test / coverage reports

-.coverage

-.tox

+# Result of running python setup.py install/pip install -e

+/build/

+/msal.egg-info/

-#Translations

-*.mo

+# Test results

+/TestResults/

-#Mr Developer

-.mr.developer.cfg

+# User-specific files

+*.suo

+*.user

+*.sln.docstates

+/tests/config.py

-# Emacs backup files

-*~

+# Windows image file caches

+Thumbs.db

+ehthumbs.db

-# IDEA / PyCharm IDE

-.idea/

+# Folder config file

+Desktop.ini

-# vim

-*.vim

-*.swp

+# Recycle Bin used on file shares

+$RECYCLE.BIN/

+

+# Mac desktop service store files

+.DS_Store

-# Virtualenvs

-env*

+.idea

+src/build

+*.iml

+/doc/_build

-docs/source/reference/services

-tests/coverage.xml

-tests/nosetests.xml

+# Virtual Environments

+/env*

+.venv/

+docs/_build/

+# Visual Studio Files

+/.vs/*

+/tests/.vs/*

+

+# vim files

+*.swp

# The test configuration file(s) could potentially contain credentials

tests/config.json

+

+.env

+.perf.baseline

diff --git a/.travis.yml b/.travis.yml

new file mode 100644

index 00000000..85917242

--- /dev/null

+++ b/.travis.yml

@@ -0,0 +1,46 @@

+sudo: false

+language: python

+python:

+ - "2.7"

+ - "3.5"

+ - "3.6"

+# Borrowed from https://github.com/travis-ci/travis-ci/issues/9815

+# Enable 3.7 without globally enabling sudo and dist: xenial for other build jobs

+matrix:

+ include:

+ - python: 3.7

+ dist: xenial

+ sudo: true

+ - python: 3.8

+ dist: xenial

+ sudo: true

+

+install:

+ - pip install -r requirements.txt

+script:

+ - python -m unittest discover -s tests

+

+deploy:

+ - # test pypi

+ provider: pypi

+ distributions: "sdist bdist_wheel"

+ server: https://test.pypi.org/legacy/

+ user: "nugetaad"

+ password:

+ secure: KkjKySJujYxx31B15mlAZr2Jo4P99LcrMj3uON/X/WMXAqYVcVsYJ6JSzUvpNnCAgk+1hc24Qp6nibQHV824yiK+eG4qV+lpzkEEedkRx6NOW/h09OkT+pOSVMs0kcIhz7FzqChpl+jf6ZZpb13yJpQg2LoZIA4g8UdYHHFidWt4m5u1FZ9LPCqQ0OT3gnKK4qb0HIDaECfz5GYzrelLLces0PPwj1+X5eb38xUVtbkA1UJKLGKI882D8Rq5eBdbnDGsfDnF6oU+EBnGZ7o6HVQLdBgagDoVdx7yoXyntULeNxTENMTOZJEJbncQwxRgeEqJWXTTEW57O6Jo5uiHEpJA9lAePlRbS+z6BPDlnQogqOdTsYS0XMfOpYE0/r3cbtPUjETOmGYQxjQzfrFBfM7jaWnUquymZRYqCQ66VDo3I/ykNOCoM9qTmWt5L/MFfOZyoxLHnDThZBdJ3GXHfbivg+v+vOfY1gG8e2H2lQY+/LIMIJibF+MS4lJgrB81dcNdBzyxMNByuWQjSL1TY7un0QzcRcZz2NLrFGg8+9d67LQq4mK5ySimc6zdgnanuROU02vGr1EApT6D/qUItiulFgWqInNKrFXE9q74UP/WSooZPoLa3Du8y5s4eKerYYHQy5eSfIC8xKKDU8MSgoZhwQhCUP46G9Nsty0PYQc=

+ on:

+ branch: master

+ tags: false

+ condition: $TRAVIS_PYTHON_VERSION = "2.7"

+

+ - # production pypi

+ provider: pypi

+ distributions: "sdist bdist_wheel"

+ user: "nugetaad"

+ password:

+ secure: KkjKySJujYxx31B15mlAZr2Jo4P99LcrMj3uON/X/WMXAqYVcVsYJ6JSzUvpNnCAgk+1hc24Qp6nibQHV824yiK+eG4qV+lpzkEEedkRx6NOW/h09OkT+pOSVMs0kcIhz7FzqChpl+jf6ZZpb13yJpQg2LoZIA4g8UdYHHFidWt4m5u1FZ9LPCqQ0OT3gnKK4qb0HIDaECfz5GYzrelLLces0PPwj1+X5eb38xUVtbkA1UJKLGKI882D8Rq5eBdbnDGsfDnF6oU+EBnGZ7o6HVQLdBgagDoVdx7yoXyntULeNxTENMTOZJEJbncQwxRgeEqJWXTTEW57O6Jo5uiHEpJA9lAePlRbS+z6BPDlnQogqOdTsYS0XMfOpYE0/r3cbtPUjETOmGYQxjQzfrFBfM7jaWnUquymZRYqCQ66VDo3I/ykNOCoM9qTmWt5L/MFfOZyoxLHnDThZBdJ3GXHfbivg+v+vOfY1gG8e2H2lQY+/LIMIJibF+MS4lJgrB81dcNdBzyxMNByuWQjSL1TY7un0QzcRcZz2NLrFGg8+9d67LQq4mK5ySimc6zdgnanuROU02vGr1EApT6D/qUItiulFgWqInNKrFXE9q74UP/WSooZPoLa3Du8y5s4eKerYYHQy5eSfIC8xKKDU8MSgoZhwQhCUP46G9Nsty0PYQc=

+ on:

+ branch: master

+ tags: true

+ condition: $TRAVIS_PYTHON_VERSION = "2.7"

+

diff --git a/LICENSE b/LICENSE

index 844a00f9..e7a9ff04 100644

--- a/LICENSE

+++ b/LICENSE

@@ -1,7 +1,6 @@

The MIT License (MIT)

-Copyright (c) 2021 Ray Luo

-Copyright (c) 2018-2021 Microsoft Corporation.

+Copyright (c) Microsoft Corporation.

All rights reserved.

This code is licensed under the MIT License.

@@ -22,4 +21,4 @@ FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT.IN NO EVENT SHALL THE

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN

-THE SOFTWARE.

+THE SOFTWARE.

\ No newline at end of file

diff --git a/README.md b/README.md

new file mode 100644

index 00000000..9d72fdfe

--- /dev/null

+++ b/README.md

@@ -0,0 +1,147 @@

+# Microsoft Authentication Library (MSAL) for Python

+

+| `dev` branch | Reference Docs | # of Downloads per different platforms | # of Downloads per recent MSAL versions | Benchmark Diagram |

+|:------------:|:--------------:|:--------------------------------------:|:---------------------------------------:|:-----------------:|

+ [](https://github.com/AzureAD/microsoft-authentication-library-for-python/actions) | [](https://msal-python.readthedocs.io/en/latest/?badge=latest) | [](https://pypistats.org/packages/msal) | [](https://pepy.tech/project/msal) | [📉](https://azuread.github.io/microsoft-authentication-library-for-python/dev/bench/)

+

+The Microsoft Authentication Library for Python enables applications to integrate with the [Microsoft identity platform](https://aka.ms/aaddevv2). It allows you to sign in users or apps with Microsoft identities ([Azure AD](https://azure.microsoft.com/services/active-directory/), [Microsoft Accounts](https://account.microsoft.com) and [Azure AD B2C](https://azure.microsoft.com/services/active-directory-b2c/) accounts) and obtain tokens to call Microsoft APIs such as [Microsoft Graph](https://graph.microsoft.io/) or your own APIs registered with the Microsoft identity platform. It is built using industry-standard OAuth2 and OpenID Connect protocols.

+

+Not sure whether this is the right SDK for your app? There are other Microsoft Identity SDKs

+[here](https://github.com/AzureAD/microsoft-authentication-library-for-python/wiki/Microsoft-Authentication-Client-Libraries).

+

+Quick links:

+

+| [Getting Started](https://learn.microsoft.com/azure/active-directory/develop/web-app-quickstart?pivots=devlang-python)| [Docs](https://github.com/AzureAD/microsoft-authentication-library-for-python/wiki) | [Samples](https://aka.ms/aaddevsamplesv2) | [Support](README.md#community-help-and-support) | [Feedback](https://forms.office.com/r/TMjZkDbzjY) |

+| --- | --- | --- | --- | --- |

+

+## Scenarios supported

+

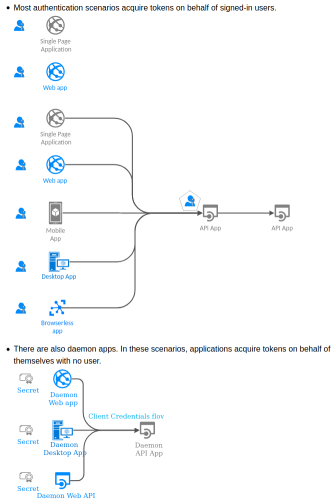

+Click on the following thumbnail to visit a large map with clickable links to proper samples.

+

+[](https://msal-python.readthedocs.io/en/latest/)

+

+## Installation

+

+You can find MSAL Python on [PyPI](https://pypi.org/project/msal/).
+1. If you haven't already, [install and/or upgrade pip](https://pip.pypa.io/en/stable/installing/)
+   in your Python environment to a recent version. We tested with pip 18.1.

+2. As usual, just run `pip install msal`.

+

+## Versions

+

+This library follows [Semantic Versioning](http://semver.org/).

+

+You can find the changes for each version under

+[Releases](https://github.com/AzureAD/microsoft-authentication-library-for-python/releases).

+

+## Usage

+

+Before using MSAL Python (or any MSAL SDKs, for that matter), you will have to

+[register your application with the Microsoft identity platform](https://docs.microsoft.com/azure/active-directory/develop/quickstart-v2-register-an-app).

+

+Acquiring tokens with MSAL Python follows this 3-step pattern.
+(Note: This is the high-level conceptual pattern.
+There will be some variations for different flows. They are demonstrated in
+[runnable samples hosted right in this repo](https://github.com/AzureAD/microsoft-authentication-library-for-python/tree/dev/sample).
+)

+

+

+1. MSAL proposes a clean separation between
+   [public client applications and confidential client applications](https://tools.ietf.org/html/rfc6749#section-2.1).
+   So you will first create either a `PublicClientApplication` or a `ConfidentialClientApplication` instance,
+   and ideally reuse it during the lifecycle of your app. The following example shows a `PublicClientApplication`:
+
+   ```python
+   from msal import PublicClientApplication
+   app = PublicClientApplication(
+       "your_client_id",
+       authority="https://login.microsoftonline.com/Enter_the_Tenant_Name_Here")
+   ```
+
+   Later, each time you want an access token, you start by:
+   ```python
+   result = None  # It is just an initial value. Please follow instructions below.
+   ```

+

+2. The API model in MSAL gives you explicit control over how to use the token cache.
+   This cache step is technically optional, but we highly recommend that you use the MSAL cache.
+   It automatically handles token refresh for you.
+
+   ```python
+   # We now check the cache to see
+   # whether we already have some accounts that the end user already used to sign in before.
+   accounts = app.get_accounts()
+   if accounts:
+       # If so, you could then somehow display these accounts and let end user choose
+       print("Pick the account you want to use to proceed:")
+       for a in accounts:
+           print(a["username"])
+       # Assuming the end user chose this one
+       chosen = accounts[0]
+       # Now let's try to find a token in cache for this account
+       result = app.acquire_token_silent(["your_scope"], account=chosen)
+   ```

+

+3. If there is no suitable token in the cache, or you chose to skip the previous step,
+   it is time to send a request to AAD to obtain a token.
+   There are different methods based on your client type and scenario. Here we demonstrate a placeholder flow.
+
+   ```python
+   if not result:
+       # So no suitable token exists in cache. Let's get a new one from AAD.
+       result = app.acquire_token_by_one_of_the_actual_method(..., scopes=["User.Read"])
+   if "access_token" in result:
+       print(result["access_token"])  # Yay!
+   else:
+       print(result.get("error"))
+       print(result.get("error_description"))
+       print(result.get("correlation_id"))  # You may need this when reporting a bug
+   ```

+

+Refer to the [Wiki](https://github.com/AzureAD/microsoft-authentication-library-for-python/wiki) pages for more details on the MSAL Python functionality and usage.

+

+## Migrating from ADAL

+

+If your application is using ADAL Python, we recommend that you migrate to MSAL Python. No new feature work will be done in ADAL Python.

+

+See the [ADAL to MSAL migration](https://github.com/AzureAD/microsoft-authentication-library-for-python/wiki/Migrate-to-MSAL-Python) guide.

+

+## Roadmap

+

+You can follow the latest updates and plans for MSAL Python in the [Roadmap](https://github.com/AzureAD/microsoft-authentication-library-for-python/wiki/Roadmap) published on our Wiki.

+

+## Samples and Documentation

+

+MSAL Python supports multiple [application types and authentication scenarios](https://docs.microsoft.com/azure/active-directory/develop/authentication-flows-app-scenarios).

+The generic documents on

+[Auth Scenarios](https://docs.microsoft.com/azure/active-directory/develop/authentication-scenarios)

+and

+[Auth protocols](https://docs.microsoft.com/azure/active-directory/develop/active-directory-v2-protocols)

+are recommended reading.

+

+We provide a [full suite of sample applications](https://aka.ms/aaddevsamplesv2) and [documentation](https://aka.ms/aaddevv2) to help you get started with learning the Microsoft identity platform.

+

+## Community Help and Support

+

+We leverage Stack Overflow to work with the community on supporting Azure Active Directory and its SDKs, including this one!

+We highly recommend you ask your questions on Stack Overflow (we're all on there!)

+Also browse existing issues to see if someone has had your question before.

+

+We recommend you use the "msal" tag so we can see it!

+Here is the latest Q&A on Stack Overflow for MSAL:

+[http://stackoverflow.com/questions/tagged/msal](http://stackoverflow.com/questions/tagged/msal)

+

+## Submit Feedback

+We'd like your thoughts on this library. Please complete [this short survey.](https://forms.office.com/r/TMjZkDbzjY)

+

+## Security Reporting

+

+If you find a security issue with our libraries or services, please report it to [secure@microsoft.com](mailto:secure@microsoft.com) with as much detail as possible. Your submission may be eligible for a bounty through the [Microsoft Bounty](http://aka.ms/bugbounty) program. Please do not post security issues to GitHub Issues or any other public site. We will contact you shortly upon receiving the information. We encourage you to get notifications of when security incidents occur by visiting [this page](https://technet.microsoft.com/security/dd252948) and subscribing to Security Advisory Alerts.

+

+## Contributing

+

+All code is licensed under the MIT license and we triage actively on GitHub. We enthusiastically welcome contributions and feedback. Please read the [contributing guide](./contributing.md) before starting.

+

+## We Value and Adhere to the Microsoft Open Source Code of Conduct

+

+This project has adopted the [Microsoft Open Source Code of Conduct](https://opensource.microsoft.com/codeofconduct/). For more information see the [Code of Conduct FAQ](https://opensource.microsoft.com/codeofconduct/faq/) or contact [opencode@microsoft.com](mailto:opencode@microsoft.com) with any additional questions or comments.
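The Usage section of the README above describes a three-step pattern: create the app once, try the token cache, then fall back to a real token request. A minimal sketch of steps 2 and 3 as one reusable function, assuming `app` is an MSAL application object created as in step 1. It calls only `get_accounts()` and `acquire_token_silent()`, which the README itself uses; `get_token`, `describe` and the `acquire_new_token` parameter are hypothetical, the latter standing in for whichever `acquire_token_*` method fits your flow:

```python
from typing import Callable, Optional, Sequence


def get_token(app, scopes: Sequence[str], acquire_new_token: Callable[[], dict]) -> dict:
    """Try the token cache first, then fall back to a real token request."""
    result: Optional[dict] = None
    accounts = app.get_accounts()
    if accounts:
        # A real app might let the user pick; this sketch takes the first account.
        result = app.acquire_token_silent(list(scopes), account=accounts[0])
    if not result:
        # No suitable token in the cache, so ask the identity platform for a new one.
        result = acquire_new_token()
    return result


def describe(result: dict) -> str:
    """Summarize a result dict using the same fields the README's step 3 prints."""
    if "access_token" in result:
        return "got a token"
    return "{}: {} (correlation_id={})".format(
        result.get("error"), result.get("error_description"), result.get("correlation_id"))
```

For example, with a `PublicClientApplication` you might pass `lambda: app.acquire_token_interactive(["User.Read"])` as the fallback. Taking a callable keeps the cache-first logic independent of the flow and lets you exercise it with a stub object in tests.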

diff --git a/contributing.md b/contributing.md

new file mode 100644

index 00000000..e78c1ce1

--- /dev/null

+++ b/contributing.md

@@ -0,0 +1,122 @@

+# CONTRIBUTING

+

+Azure Active Directory SDK projects welcome new contributors. This document will guide you

+through the process.

+

+### CONTRIBUTOR LICENSE AGREEMENT

+

+Please visit [https://cla.microsoft.com/](https://cla.microsoft.com/) and sign the Contributor License

+Agreement. You only need to do that once. We cannot look at your code until you've submitted this request.

+

+

+### FORK

+

+Fork this project on GitHub and check out your copy.

+

+Example for Project Foo (which can be any ADAL or MSAL or just any library):

+

+```

+$ git clone git@github.com:username/project-foo.git

+$ cd project-foo

+$ git remote add upstream git@github.com:AzureAD/project-foo.git

+```

+

+No need to decide if you want your feature or bug fix to go into the dev branch

+or the master branch. **All bug fixes and new features should go into the dev branch.**

+

+The master branch is effectively frozen; patches that change the SDK's

+protocols or API surface area or affect the run-time behavior of the SDK will be rejected.

+

+Some of our SDKs have bundled dependencies that are not part of the project proper.

+Send any changes to files in those directories, or their subdirectories, to the respective projects.

+Do not send those patches to us; we cannot accept them.

+

+In case of doubt, open an issue in the [issue tracker](issues).

+

+Especially do so if you plan to work on a major change in functionality. Nothing is more

+frustrating than seeing your hard work go to waste because your vision

+does not align with our goals for the SDK.

+

+

+### BRANCH

+

+Okay, so you have decided on the proper branch. Create a feature branch

+and start hacking:

+

+```

+$ git checkout -b my-feature-branch

+```

+

+### COMMIT

+

+Make sure git knows your name and email address:

+

+```

+$ git config --global user.name "J. Random User"

+$ git config --global user.email "j.random.user@example.com"

+```

+

+Writing good commit logs is important. A commit log should describe what

+changed and why. Follow these guidelines when writing one:

+

+1. The first line should be 50 characters or less and contain a short

+ description of the change prefixed with the name of the changed

+ subsystem (e.g. "net: add localAddress and localPort to Socket").

+2. Keep the second line blank.

+3. Wrap all other lines at 72 columns.

+

+A good commit log looks like this:

+

+```

+fix: explaining the commit in one line

+

+Body of commit message is a few lines of text, explaining things

+in more detail, possibly giving some background about the issue

+being fixed, etc etc.

+

+The body of the commit message can be several paragraphs, and

+please do proper word-wrap and keep columns shorter than about

+72 characters or so. That way `git log` will show things

+nicely even when it is indented.

+```

+

+The header line should be meaningful; it is what other people see when they

+run `git shortlog` or `git log --oneline`.

+

+Check the output of `git log --oneline files_that_you_changed` to find out

+what directories your changes touch.

+

+

+### REBASE

+

+Use `git rebase` (not `git merge`) to sync your work from time to time.

+

+```

+$ git fetch upstream

+$ git rebase upstream/dev

+```

+

+

+### TEST

+

+Bug fixes and features should come with tests. Add your tests to the
+test directory. Its location varies by repository, but it often follows the /src/test convention.
+Look at existing tests to see how they should be structured (license boilerplate, common includes, etc.).

+

+

+Make sure that all tests pass.

+

+

+### PUSH

+

+```

+$ git push origin my-feature-branch

+```

+

+Go to https://github.com/username/microsoft-authentication-library-for-***.git and select your feature branch. Click

+the 'Pull Request' button and fill out the form.

+

+Pull requests are usually reviewed within a few days. If there are comments

+to address, apply your changes in a separate commit and push that to your

+feature branch. Post a comment in the pull request afterwards; GitHub does

+not send out notifications when you add commits.
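The commit-log guidelines in `contributing.md` above (a subject of at most 50 characters with a subsystem prefix, a blank second line, and body lines wrapped at 72 columns) can be checked mechanically. A minimal sketch; `commit_message_problems` is a hypothetical helper, and treating the prefix as a single `name: ` token is an assumption, since the guide does not define its syntax:

```python
import re
from typing import List

# Assumed prefix shape: "subsystem: description", e.g. "net: add localAddress ...".
SUBJECT_PATTERN = re.compile(r"^[\w.-]+: \S")


def commit_message_problems(message: str) -> List[str]:
    """Return human-readable violations of the commit-log guidelines (empty if none)."""
    lines = message.splitlines()
    if not lines or not lines[0].strip():
        return ["missing subject line"]
    problems = []
    subject = lines[0]
    if len(subject) > 50:
        problems.append("subject is {} characters; keep it to 50 or fewer".format(len(subject)))
    if not SUBJECT_PATTERN.match(subject):
        problems.append('subject should start with a subsystem prefix such as "net: "')
    if len(lines) > 1 and lines[1].strip():
        problems.append("second line must be blank")
    # Body lines start at line 3 (1-based) and should wrap at 72 columns.
    for number, line in enumerate(lines[2:], start=3):
        if len(line) > 72:
            problems.append("line {} is {} characters; wrap at 72".format(number, len(line)))
    return problems
```

A local `commit-msg` Git hook receives the path of the message file as its first argument and aborts the commit if it exits non-zero, so such a hook could read that file, call this function, and exit with status 1 when the returned list is non-empty.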

diff --git a/docs/Makefile b/docs/Makefile

new file mode 100644

index 00000000..298ea9e2

--- /dev/null

+++ b/docs/Makefile

@@ -0,0 +1,19 @@

+# Minimal makefile for Sphinx documentation

+#

+

+# You can set these variables from the command line.

+SPHINXOPTS =

+SPHINXBUILD = sphinx-build

+SOURCEDIR = .

+BUILDDIR = _build

+

+# Put it first so that "make" without argument is like "make help".

+help:

+	@$(SPHINXBUILD) -M help "$(SOURCEDIR)" "$(BUILDDIR)" $(SPHINXOPTS) $(O)

+

+.PHONY: help Makefile

+

+# Catch-all target: route all unknown targets to Sphinx using the new

+# "make mode" option. $(O) is meant as a shortcut for $(SPHINXOPTS).

+%: Makefile

+	@$(SPHINXBUILD) -M $@ "$(SOURCEDIR)" "$(BUILDDIR)" $(SPHINXOPTS) $(O)

\ No newline at end of file
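The catch-all `%: Makefile` rule in `docs/Makefile` above forwards any unknown target, such as `make html`, to Sphinx's make mode as `sphinx-build -M <target> <sourcedir> <builddir> ...`. A sketch that builds the same command line from Python, for example to run the docs build without `make`; the helper name is hypothetical, and splitting the option strings with `shlex` approximates how the shell splits the unquoted `$(SPHINXOPTS) $(O)` in the recipe:

```python
import shlex
from typing import List


def sphinx_make_mode_argv(target: str, sourcedir: str = ".", builddir: str = "_build",
                          sphinxopts: str = "", extra: str = "") -> List[str]:
    """Build the argv that the Makefile's catch-all rule passes to sphinx-build."""
    # "-M" must come first: it selects Sphinx's make mode for the given target.
    argv = ["sphinx-build", "-M", target, sourcedir, builddir]
    # SPHINXOPTS and O are plain strings in make, so split them the way a shell would.
    argv += shlex.split(sphinxopts) + shlex.split(extra)
    return argv
```

From the repository root, passing the result of `sphinx_make_mode_argv("html", sourcedir="docs", builddir="docs/_build")` to `subprocess.run(..., check=True)` should be roughly equivalent to `make -C docs html`.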

diff --git a/docs/conf.py b/docs/conf.py

new file mode 100644

index 00000000..024451d5

--- /dev/null

+++ b/docs/conf.py

@@ -0,0 +1,186 @@

+# -*- coding: utf-8 -*-

+#

+# Configuration file for the Sphinx documentation builder.

+#

+# This file does only contain a selection of the most common options. For a

+# full list see the documentation:

+# http://www.sphinx-doc.org/en/master/config

+

+# -- Path setup --------------------------------------------------------------

+

+# If extensions (or modules to document with autodoc) are in another directory,

+# add these directories to sys.path here. If the directory is relative to the

+# documentation root, use os.path.abspath to make it absolute, like shown here.

+#

+from datetime import date

+import os

+import sys

+sys.path.insert(0, os.path.abspath('..'))

+

+

+# -- Project information -----------------------------------------------------

+

+project = u'MSAL Python'

+copyright = u'{0}, Microsoft'.format(date.today().year)

+author = u'Microsoft'

+

+# The short X.Y version

+from msal import __version__ as version

+# The full version, including alpha/beta/rc tags

+release = version

+

+

+# -- General configuration ---------------------------------------------------

+

+# If your documentation needs a minimal Sphinx version, state it here.

+#

+# needs_sphinx = '1.0'

+

+# Add any Sphinx extension module names here, as strings. They can be

+# extensions coming with Sphinx (named 'sphinx.ext.*') or your custom

+# ones.

+extensions = [

+    'sphinx.ext.autodoc',  # This seems to need to be the first extension to load
+    'sphinx.ext.githubpages',
+    'sphinx_paramlinks',

+]

+

+# Add any paths that contain templates here, relative to this directory.

+templates_path = ['_templates']

+

+# The suffix(es) of source filenames.

+# You can specify multiple suffix as a list of string:

+#

+# source_suffix = ['.rst', '.md']

+source_suffix = '.rst'

+

+# The master toctree document.

+master_doc = 'index'

+

+# The language for content autogenerated by Sphinx. Refer to documentation

+# for a list of supported languages.

+#

+# This is also used if you do content translation via gettext catalogs.

+# Usually you set "language" from the command line for these cases.

+language = 'en'

+

+# List of patterns, relative to source directory, that match files and

+# directories to ignore when looking for source files.

+# This pattern also affects html_static_path and html_extra_path.

+exclude_patterns = [u'_build', 'Thumbs.db', '.DS_Store']

+

+# The name of the Pygments (syntax highlighting) style to use.

+pygments_style = None

+

+

+# -- Options for HTML output -------------------------------------------------

+

+# The theme to use for HTML and HTML Help pages. See the documentation for

+# a list of builtin themes.

+#

+# html_theme = 'alabaster'

+html_theme = 'furo'

+

+# Theme options are theme-specific and customize the look and feel of a theme

+# further. For a list of options available for each theme, see the

+# documentation.

+#

+html_theme_options = {

+ "light_css_variables": {

+ "font-stack": "'Segoe UI', SegoeUI, 'Helvetica Neue', Helvetica, Arial, sans-serif",

+ "font-stack--monospace": "SFMono-Regular, Consolas, 'Liberation Mono', Menlo, Courier, monospace",

+ },

+}

+

+# Add any paths that contain custom static files (such as style sheets) here,

+# relative to this directory. They are copied after the builtin static files,

+# so a file named "default.css" will overwrite the builtin "default.css".

+html_static_path = ['_static']

+

+# Custom sidebar templates, must be a dictionary that maps document names

+# to template names.

+#

+# The default sidebars (for documents that don't match any pattern) are

+# defined by theme itself. Builtin themes are using these templates by

+# default: ``['localtoc.html', 'relations.html', 'sourcelink.html',

+# 'searchbox.html']``.

+#

+# html_sidebars = {}

+

+

+# -- Options for HTMLHelp output ---------------------------------------------

+

+# Output file base name for HTML help builder.

+htmlhelp_basename = 'MSALPythondoc'

+

+

+# -- Options for LaTeX output ------------------------------------------------

+

+latex_elements = {

+ # The paper size ('letterpaper' or 'a4paper').

+ #

+ # 'papersize': 'letterpaper',

+

+ # The font size ('10pt', '11pt' or '12pt').

+ #

+ # 'pointsize': '10pt',

+

+ # Additional stuff for the LaTeX preamble.

+ #

+ # 'preamble': '',

+

+ # Latex figure (float) alignment

+ #

+ # 'figure_align': 'htbp',

+}

+

+# Grouping the document tree into LaTeX files. List of tuples

+# (source start file, target name, title,

+# author, documentclass [howto, manual, or own class]).

+latex_documents = [

+ (master_doc, 'MSALPython.tex', u'MSAL Python Documentation',

+ u'Microsoft', 'manual'),

+]

+

+

+# -- Options for manual page output ------------------------------------------

+

+# One entry per manual page. List of tuples

+# (source start file, name, description, authors, manual section).

+man_pages = [

+ (master_doc, 'msalpython', u'MSAL Python Documentation',

+ [author], 1)

+]

+

+

+# -- Options for Texinfo output ----------------------------------------------

+

+# Grouping the document tree into Texinfo files. List of tuples

+# (source start file, target name, title, author,

+# dir menu entry, description, category)

+texinfo_documents = [

+ (master_doc, 'MSALPython', u'MSAL Python Documentation',

+ author, 'MSALPython', 'Microsoft Authentication Library (MSAL) for Python.',

+ 'Miscellaneous'),

+]

+

+

+# -- Options for Epub output -------------------------------------------------

+

+# Bibliographic Dublin Core info.

+epub_title = project

+

+# The unique identifier of the text. This can be a ISBN number

+# or the project homepage.

+#

+# epub_identifier = ''

+

+# A unique identification for the text.

+#

+# epub_uid = ''

+

+# A list of files that should not be packed into the epub file.

+epub_exclude_files = ['search.html']

+

+

+# -- Extension configuration -------------------------------------------------
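In `docs/conf.py` above, the comment promises a short X.Y `version`, but the assignment reuses the full `msal.__version__`, so `version` and `release` end up identical. If the distinction matters, here is a minimal sketch of deriving X.Y; the `short_version` helper is hypothetical, and it assumes version strings begin with two dot-separated integers:

```python
import re


def short_version(full_version: str) -> str:
    """Return the "X.Y" prefix of a version string, e.g. "1.26" for "1.26.0b1"."""
    match = re.match(r"(\d+)\.(\d+)", full_version)
    if match is None:
        raise ValueError("not an X.Y[...] version: {!r}".format(full_version))
    return "{}.{}".format(match.group(1), match.group(2))


# In docs/conf.py this could replace the direct import (hypothetical change):
#   from msal import __version__ as release   # full version, including alpha/beta/rc tags
#   version = short_version(release)           # short X.Y version
```

Using a regular expression rather than splitting on dots keeps pre-release suffixes such as `b1` out of the short version even when they are attached to the minor number.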

diff --git a/docs/daemon-app.svg b/docs/daemon-app.svg

new file mode 100644

index 00000000..8f1af659

--- /dev/null

+++ b/docs/daemon-app.svg

@@ -0,0 +1,1074 @@

+

+

+

+

\ No newline at end of file

diff --git a/docs/index.rst b/docs/index.rst

new file mode 100644

index 00000000..e608fe6b

--- /dev/null

+++ b/docs/index.rst

@@ -0,0 +1,132 @@

+MSAL Python Documentation

+=========================

+

+.. toctree::

+ :maxdepth: 2

+ :caption: Contents:

+ :hidden:

+

+ index

+

+..

+ Comment: Perhaps because of the theme, only the first level sections will show in TOC,

+ regardless of maxdepth setting.

+

+You can find high-level conceptual documentation in the project

+`README `_.

+

+Scenarios

+=========

+

+There are many `different application scenarios `_.

+MSAL Python supports some of them.

+**The following diagram serves as a map. Locate your application scenario on the map.**

+**If the corresponding icon is clickable, it will bring you to an MSAL Python sample for that scenario.**

+

+* Most authentication scenarios acquire tokens on behalf of signed-in users.

+

+ .. raw:: html

+

+

+

+

+

+

+* There are also daemon apps. In these scenarios, applications acquire tokens on behalf of themselves with no user.

+

+ .. raw:: html

+

+

+

+

+

+

+* There are other less common samples, such as those for ADAL-to-MSAL migration,

+ `available inside the project code base

+ `_.

+

+

+API Reference

+=============

+

+The following section is the API Reference of MSAL Python.

+The API Reference is like a dictionary. You **read this API section when and only when**:

+

+* You already followed our sample(s) above and have your app up and running,

+ but want to know more about how you could tweak the authentication experience

+ by using other optional parameters (there are plenty of them!)

+* You read the MSAL Python source code and found a helper function that is useful to you,

+ then you would want to double check whether that helper is documented below.

+ Only documented APIs are considered part of the MSAL Python public API,

+ which are guaranteed to be backward-compatible in MSAL Python 1.x series.

+ Undocumented internal helpers are subject to change at any time, without prior notice.

+

+.. note::

+

+ Only APIs and their parameters documented in this section are part of public API,

+ with guaranteed backward compatibility for the entire 1.x series.

+

+ Other modules in the source code are all considered as internal helpers,

+ which could change at any time in the future, without prior notice.

+

+MSAL proposes a clean separation between

+`public client applications and confidential client applications

+`_.

+

+They are implemented as two separate classes,

+with different methods for different authentication scenarios.

+

+ClientApplication

+=================

+

+.. autoclass:: msal.ClientApplication

+ :members:

+ :inherited-members:

+

+ .. automethod:: __init__

+

+PublicClientApplication

+=======================

+

+.. autoclass:: msal.PublicClientApplication

+ :members:

+

+ .. automethod:: __init__

+

+ConfidentialClientApplication

+=============================

+

+.. autoclass:: msal.ConfidentialClientApplication

+ :members:

+

+

+TokenCache

+==========

+

+One of the parameters accepted by

+both `PublicClientApplication` and `ConfidentialClientApplication`

+is the `TokenCache`.

+

+.. autoclass:: msal.TokenCache

+ :members:

+

+You can subclass it to add new behavior, such as token serialization.

+See `SerializableTokenCache` for example.

+

+.. autoclass:: msal.SerializableTokenCache

+ :members:

diff --git a/docs/make.bat b/docs/make.bat

new file mode 100644

index 00000000..27f573b8

--- /dev/null

+++ b/docs/make.bat

@@ -0,0 +1,35 @@

+@ECHO OFF

+

+pushd %~dp0

+

+REM Command file for Sphinx documentation

+

+if "%SPHINXBUILD%" == "" (

+ set SPHINXBUILD=sphinx-build

+)

+set SOURCEDIR=.

+set BUILDDIR=_build

+

+if "%1" == "" goto help

+

+%SPHINXBUILD% >NUL 2>NUL

+if errorlevel 9009 (

+ echo.

+ echo.The 'sphinx-build' command was not found. Make sure you have Sphinx

+ echo.installed, then set the SPHINXBUILD environment variable to point

+ echo.to the full path of the 'sphinx-build' executable. Alternatively you

+ echo.may add the Sphinx directory to PATH.

+ echo.

+ echo.If you don't have Sphinx installed, grab it from

+ echo.http://sphinx-doc.org/

+ exit /b 1

+)

+

+%SPHINXBUILD% -M %1 %SOURCEDIR% %BUILDDIR% %SPHINXOPTS%

+goto end

+

+:help

+%SPHINXBUILD% -M help %SOURCEDIR% %BUILDDIR% %SPHINXOPTS%

+

+:end

+popd

diff --git a/docs/requirements.txt b/docs/requirements.txt

new file mode 100644

index 00000000..0fd0c33a

--- /dev/null

+++ b/docs/requirements.txt

@@ -0,0 +1,3 @@

+furo

+sphinx-paramlinks

+-r ../requirements.txt

diff --git a/docs/scenarios-with-users.svg b/docs/scenarios-with-users.svg

new file mode 100644

index 00000000..fffdec47

--- /dev/null

+++ b/docs/scenarios-with-users.svg

@@ -0,0 +1,2789 @@

+

+

+

+

\ No newline at end of file

diff --git a/docs/thumbnail.png b/docs/thumbnail.png

new file mode 100644

index 00000000..e1606e91

Binary files /dev/null and b/docs/thumbnail.png differ

diff --git a/msal/__init__.py b/msal/__init__.py

new file mode 100644

index 00000000..4e2faaed

--- /dev/null

+++ b/msal/__init__.py

@@ -0,0 +1,36 @@

+#------------------------------------------------------------------------------

+#

+# Copyright (c) Microsoft Corporation.

+# All rights reserved.

+#

+# This code is licensed under the MIT License.

+#

+# Permission is hereby granted, free of charge, to any person obtaining a copy

+# of this software and associated documentation files(the "Software"), to deal

+# in the Software without restriction, including without limitation the rights

+# to use, copy, modify, merge, publish, distribute, sublicense, and / or sell

+# copies of the Software, and to permit persons to whom the Software is

+# furnished to do so, subject to the following conditions :

+#

+# The above copyright notice and this permission notice shall be included in

+# all copies or substantial portions of the Software.

+#

+# THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

+# IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

+# FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT.IN NO EVENT SHALL THE

+# AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

+# LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

+# OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN

+# THE SOFTWARE.

+#

+#------------------------------------------------------------------------------

+

+from .application import (

+ __version__,

+ ClientApplication,

+ ConfidentialClientApplication,

+ PublicClientApplication,

+ )

+from .oauth2cli.oidc import Prompt

+from .token_cache import TokenCache, SerializableTokenCache

+

diff --git a/msal/__main__.py b/msal/__main__.py

new file mode 100644

index 00000000..51b2e740

--- /dev/null

+++ b/msal/__main__.py

@@ -0,0 +1,255 @@

+# It is currently shipped inside msal library.

+# Pros: It is always available wherever msal is installed.

+# Cons: Its 3rd-party dependencies (if any) may become msal's dependency.

+"""MSAL Python Tester

+

+Usage 1: Run it on the fly.

+ python -m msal

+

+Usage 2: Build an all-in-one executable file for bug bash.

+ shiv -e msal.__main__._main -o msaltest-on-os-name.pyz .

+ Note: We choose to not define a console script to avoid name conflict.

+"""

+import base64, getpass, json, logging, sys, msal

+

+# This tester can test scenarios of these apps

+_AZURE_CLI = "04b07795-8ddb-461a-bbee-02f9e1bf7b46"

+_VISUAL_STUDIO = "04f0c124-f2bc-4f59-8241-bf6df9866bbd"

+_WHITE_BOARD = "95de633a-083e-42f5-b444-a4295d8e9314"

+_KNOWN_APPS = {

+ _AZURE_CLI: {

+ "client_id": _AZURE_CLI,

+ "name": "Azure CLI (Correctly configured for MSA-PT)",

+ "path_in_redirect_uri": None,

+ },

+ _VISUAL_STUDIO: {

+ "client_id": _VISUAL_STUDIO,

+ "name": "Visual Studio (Correctly configured for MSA-PT)",

+ "path_in_redirect_uri": None,

+ },

+ _WHITE_BOARD: {

+ "client_id": _WHITE_BOARD,

+ "name": "Whiteboard Services (Non MSA-PT app. Accepts AAD & MSA accounts.)",

+ },

+}

+

+def print_json(blob):

+ print(json.dumps(blob, indent=2, sort_keys=True))

+

+def _input_boolean(message):

+ return input(

+ "{} (N/n/F/f or empty means False, otherwise it is True): ".format(message)

+ ) not in ('N', 'n', 'F', 'f', '')

+

+def _input(message, default=None):

+ return input(message.format(default=default)).strip() or default

+

+def _select_options(

+ options, header="Your options:", footer=" Your choice? ", option_renderer=str,

+ accept_nonempty_string=False,

+ ):

+ assert options, "options must not be empty"

+ if header:

+ print(header)

+ for i, o in enumerate(options, start=1):

+ print(" {}: {}".format(i, option_renderer(o)))

+ if accept_nonempty_string:

+ print(" Or you can just type in your input.")

+ while True:

+ raw_data = input(footer)

+ try:

+ choice = int(raw_data)

+ if 1 <= choice <= len(options):

+ return options[choice - 1]

+ except ValueError:

+ if raw_data and accept_nonempty_string:

+ return raw_data

+
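`_select_options()` keeps re-prompting until it receives either a 1-based menu number within range or, when `accept_nonempty_string=True`, any other non-empty text. A numeric answer is never treated as free text, even when it is out of range. To show that loop without a console, here is a minimal sketch in which a hypothetical `answers` iterable stands in for successive `input()` calls:

```python
def select_options(options, answers, option_renderer=str, accept_nonempty_string=False):
    """Non-interactive sketch of the menu loop; `answers` replaces input()."""
    assert options, "options must not be empty"
    for i, o in enumerate(options, start=1):
        print(" {}: {}".format(i, option_renderer(o)))
    for raw_data in answers:  # Each item plays the role of one input() call
        try:
            choice = int(raw_data)
            if 1 <= choice <= len(options):
                return options[choice - 1]  # Menu numbers are 1-based
            # An out-of-range number falls through and the loop asks again
        except ValueError:
            if raw_data and accept_nonempty_string:
                return raw_data  # Free-form text, e.g. a custom client_id
    raise EOFError("No acceptable answer was given")

# "0" is out of range and "" is rejected, so the third answer wins
print(select_options(["User.Read", "User.ReadBasic.All"], ["0", "", "2"]))  # User.ReadBasic.All
```

Unlike the real tester, this sketch raises `EOFError` when the canned answers run out instead of waiting forever.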

+def _input_scopes():

+ scopes = _select_options([

+ "https://graph.microsoft.com/.default",

+ "https://management.azure.com/.default",

+ "User.Read",

+ "User.ReadBasic.All",

+ ],

+ header="Select a scope (multiple scopes can only be input by manually typing them, delimited by space):",

+ accept_nonempty_string=True,

+ ).split() # It also converts the input string(s) into a list

+ if "https://pas.windows.net/CheckMyAccess/Linux/.default" in scopes:

+ raise ValueError("SSH Cert scope shall be tested by its dedicated functions")

+ return scopes

+

+def _select_account(app):

+ accounts = app.get_accounts()

+ if accounts:

+ return _select_options(

+ accounts,

+ option_renderer=lambda a: a["username"],

+ header="Account(s) already signed in inside MSAL Python:",

+ )

+ else:

+ print("No account available inside MSAL Python. Use other methods to acquire token first.")

+

+def _acquire_token_silent(app):

+ """acquire_token_silent() - with an account already signed into MSAL Python."""

+ account = _select_account(app)

+ if account:

+ print_json(app.acquire_token_silent(

+ _input_scopes(),

+ account=account,

+ force_refresh=_input_boolean("Bypass MSAL Python's token cache?"),

+ ))

+

+def _get_redirect_uri_path(app):

+ if app._enable_broker:

+ return None

+ if "path_in_redirect_uri" in _KNOWN_APPS.get(app.client_id, {}):

+ return _KNOWN_APPS[app.client_id]["path_in_redirect_uri"]

+ return input("What is the path in this app's redirect_uri? ")

+

+def _acquire_token_interactive(app, scopes=None, data=None):

+ """acquire_token_interactive() - User will be prompted if app opts to do select_account."""

+ scopes = scopes or _input_scopes() # Let user input scope param before less important prompt and login_hint

+ prompt = _select_options([

+ {"value": None, "description": "Unspecified. Proceed silently with a default account (if any), fallback to prompt."},

+ {"value": "none", "description": "none. Proceed silently with a default account (if any), or error out."},

+ {"value": "select_account", "description": "select_account. Prompt with an account picker."},

+ ],

+ option_renderer=lambda o: o["description"],

+ header="Prompt behavior?")["value"]

+ if prompt == "select_account":

+ login_hint = None # login_hint is unnecessary when prompt=select_account

+ else:

+ raw_login_hint = _select_options(

+ [None] + [a["username"] for a in app.get_accounts()],

+ header="login_hint? (If you have multiple signed-in sessions in browser/broker, and you specify a login_hint to match one of them, you will bypass the account picker.)",

+ accept_nonempty_string=True,

+ )

+ login_hint = raw_login_hint["username"] if isinstance(raw_login_hint, dict) else raw_login_hint

+ result = app.acquire_token_interactive(

+ scopes,

+ parent_window_handle=app.CONSOLE_WINDOW_HANDLE, # This test app is a console app

+ enable_msa_passthrough=app.client_id in [ # Apps are expected to set this right

+ _AZURE_CLI, _VISUAL_STUDIO,

+ ], # Here this test app mimics the setting for some known MSA-PT apps

+ prompt=prompt, login_hint=login_hint, data=data or {},

+ path=_get_redirect_uri_path(app),

+ )

+ if login_hint and "id_token_claims" in result:

+ signed_in_user = result.get("id_token_claims", {}).get("preferred_username")

+ if signed_in_user != login_hint:

+ logging.warning('Signed-in user "%s" does not match login_hint', signed_in_user)

+ print_json(result)

+ return result

+

+def _acquire_token_by_username_password(app):

+ """acquire_token_by_username_password() - See constraints here: https://docs.microsoft.com/en-us/azure/active-directory/develop/msal-authentication-flows#constraints-for-ropc"""

+ print_json(app.acquire_token_by_username_password(

+ _input("username: "), getpass.getpass("password: "), scopes=_input_scopes()))

+

+_JWK1 = """{"kty":"RSA", "n":"2tNr73xwcj6lH7bqRZrFzgSLj7OeLfbn8216uOMDHuaZ6TEUBDN8Uz0ve8jAlKsP9CQFCSVoSNovdE-fs7c15MxEGHjDcNKLWonznximj8pDGZQjVdfK-7mG6P6z-lgVcLuYu5JcWU_PeEqIKg5llOaz-qeQ4LEDS4T1D2qWRGpAra4rJX1-kmrWmX_XIamq30C9EIO0gGuT4rc2hJBWQ-4-FnE1NXmy125wfT3NdotAJGq5lMIfhjfglDbJCwhc8Oe17ORjO3FsB5CLuBRpYmP7Nzn66lRY3Fe11Xz8AEBl3anKFSJcTvlMnFtu3EpD-eiaHfTgRBU7CztGQqVbiQ", "e":"AQAB"}"""

+_SSH_CERT_DATA = {"token_type": "ssh-cert", "key_id": "key1", "req_cnf": _JWK1}

+_SSH_CERT_SCOPE = ["https://pas.windows.net/CheckMyAccess/Linux/.default"]

+

+def _acquire_ssh_cert_silently(app):

+ """Acquire an SSH Cert silently - This typically only works with Azure CLI"""

+ account = _select_account(app)

+ if account:

+ result = app.acquire_token_silent(

+ _SSH_CERT_SCOPE,

+ account,

+ data=_SSH_CERT_DATA,

+ force_refresh=_input_boolean("Bypass MSAL Python's token cache?"),

+ )

+ print_json(result)

+ if result and result.get("token_type") != "ssh-cert":

+ logging.error("Unable to acquire an ssh-cert.")

+

+def _acquire_ssh_cert_interactive(app):

+ """Acquire an SSH Cert interactively - This typically only works with Azure CLI"""

+ result = _acquire_token_interactive(app, scopes=_SSH_CERT_SCOPE, data=_SSH_CERT_DATA)

+ if result.get("token_type") != "ssh-cert":

+ logging.error("Unable to acquire an ssh-cert")

+

+_POP_KEY_ID = 'AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA-AAAAAAAA' # Fake key with a certain format and length

+_RAW_REQ_CNF = json.dumps({"kid": _POP_KEY_ID, "xms_ksl": "sw"})

+_POP_DATA = { # Sampled from Azure CLI's plugin connectedk8s

+ 'token_type': 'pop',

+ 'key_id': _POP_KEY_ID,

+ "req_cnf": base64.urlsafe_b64encode(_RAW_REQ_CNF.encode('utf-8')).decode('utf-8').rstrip('='),

+ # Note: Sending _RAW_REQ_CNF without base64 encoding would result in an http 500 error

+} # See also https://github.com/Azure/azure-cli-extensions/blob/main/src/connectedk8s/azext_connectedk8s/_clientproxyutils.py#L86-L92

+
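The `req_cnf` value above is base64url-encoded with its `=` padding stripped (the comment notes that sending the raw JSON results in an HTTP 500 error). A standalone sketch of that encoding, plus the padding restoration a receiver would need in order to decode it, reusing the same fake key id:

```python
import base64
import json

pop_key_id = "AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA-AAAAAAAA"  # Same fake key id as above
raw_req_cnf = json.dumps({"kid": pop_key_id, "xms_ksl": "sw"})

# base64url-encode the JSON, then drop the trailing "=" padding
req_cnf = base64.urlsafe_b64encode(raw_req_cnf.encode("utf-8")).decode("utf-8").rstrip("=")

# Decoding requires restoring the padding to a multiple of 4 characters first
padded = req_cnf + "=" * (-len(req_cnf) % 4)
decoded = json.loads(base64.urlsafe_b64decode(padded).decode("utf-8"))
print(decoded == {"kid": pop_key_id, "xms_ksl": "sw"})  # True
```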

+def _acquire_pop_token_interactive(app):

+ """Acquire a POP token interactively - This typically only works with Azure CLI"""

+ POP_SCOPE = ['6256c85f-0aad-4d50-b960-e6e9b21efe35/.default'] # KAP 1P Server App Scope, obtained from https://github.com/Azure/azure-cli-extensions/pull/4468/files#diff-a47efa3186c7eb4f1176e07d0b858ead0bf4a58bfd51e448ee3607a5b4ef47f6R116

+ result = _acquire_token_interactive(app, scopes=POP_SCOPE, data=_POP_DATA)

+ print_json(result)

+ if result.get("token_type") != "pop":

+ logging.error("Unable to acquire a pop token")

+

+def _remove_account(app):

+ """remove_account() - Invalidate account and/or token(s) from cache, so that acquire_token_silent() would be reset"""

+ account = _select_account(app)

+ if account:

+ app.remove_account(account)

+ print('Account "{}" and/or its token(s) are signed out from MSAL Python'.format(account["username"]))

+

+def _exit(app):

+ """Exit"""

+ bug_link = (

+ "https://identitydivision.visualstudio.com/Engineering/_queries/query/79b3a352-a775-406f-87cd-a487c382a8ed/"

+ if app._enable_broker else

+ "https://github.com/AzureAD/microsoft-authentication-library-for-python/issues/new/choose"

+ )

+ print("Bye. If you found a bug, please report it here: {}".format(bug_link))

+ sys.exit()

+

+def _main():

+ print("Welcome to the MSAL Python {} Tester (Experimental)\n".format(msal.__version__))

+ chosen_app = _select_options(

+ list(_KNOWN_APPS.values()),

+ option_renderer=lambda a: a["name"],

+ header="Impersonate this app (or you can type in the client_id of your own app)",

+ accept_nonempty_string=True)

+ allow_broker = _input_boolean("Allow broker?")

+ enable_debug_log = _input_boolean("Enable MSAL Python's DEBUG log?")

+ enable_pii_log = _input_boolean("Enable PII in broker's log?") if allow_broker and enable_debug_log else False

+ app = msal.PublicClientApplication(

+ chosen_app["client_id"] if isinstance(chosen_app, dict) else chosen_app,

+ authority=_select_options([

+ "https://login.microsoftonline.com/common",

+ "https://login.microsoftonline.com/organizations",

+ "https://login.microsoftonline.com/microsoft.onmicrosoft.com",

+ "https://login.microsoftonline.com/msidlab4.onmicrosoft.com",

+ "https://login.microsoftonline.com/consumers",

+ ],

+ header="Input authority (Note that MSA-PT apps would NOT use the /common authority)",

+ accept_nonempty_string=True,

+ ),

+ allow_broker=allow_broker,

+ enable_pii_log=enable_pii_log,

+ )

+ if enable_debug_log:

+ logging.basicConfig(level=logging.DEBUG)

+ while True:

+ func = _select_options([

+ _acquire_token_silent,

+ _acquire_token_interactive,

+ _acquire_token_by_username_password,

+ _acquire_ssh_cert_silently,

+ _acquire_ssh_cert_interactive,

+ _acquire_pop_token_interactive,

+ _remove_account,

+ _exit,

+ ], option_renderer=lambda f: f.__doc__, header="MSAL Python APIs:")

+ try:

+ func(app)

+ except ValueError as e:

+ logging.error("Invalid input: %s", e)

+ except KeyboardInterrupt: # Useful for bailing out a stuck interactive flow

+ print("Aborted")

+

+if __name__ == "__main__":

+ _main()

+

diff --git a/msal/application.py b/msal/application.py

new file mode 100644

index 00000000..82372cc9

--- /dev/null

+++ b/msal/application.py

@@ -0,0 +1,2166 @@

+import functools

+import json

+import time

+try: # Python 2

+ from urlparse import urljoin

+except ImportError: # Python 3

+ from urllib.parse import urljoin

+import logging

+import sys

+import warnings

+from threading import Lock

+import os

+import re

+

+from .oauth2cli import Client, JwtAssertionCreator

+from .oauth2cli.oidc import decode_part

+from .authority import Authority, WORLD_WIDE

+from .mex import send_request as mex_send_request

+from .wstrust_request import send_request as wst_send_request

+from .wstrust_response import *

+from .token_cache import TokenCache, _get_username

+import msal.telemetry

+from .region import _detect_region

+from .throttled_http_client import ThrottledHttpClient

+from .cloudshell import _is_running_in_cloud_shell

+

+

+# The __init__.py will import this. Not the other way around.

+__version__ = "1.24.1" # When releasing, also check and bump our dependencies's versions if needed

+

+logger = logging.getLogger(__name__)

+_AUTHORITY_TYPE_CLOUDSHELL = "CLOUDSHELL"

+

+def extract_certs(public_cert_content):

+ # Parses raw public certificate file contents and returns a list of strings

+ # Usage: headers = {"x5c": extract_certs(open("my_cert.pem").read())}

+ public_certificates = re.findall(

+ r'-----BEGIN CERTIFICATE-----([^-]+)-----END CERTIFICATE-----',

+ public_cert_content, re.I)

+ if public_certificates:

+ return [cert.strip() for cert in public_certificates]

+ # The public cert tags are not found in the input,

+ # let's make best effort to exclude a private key pem file.

+ if "PRIVATE KEY" in public_cert_content:

+ raise ValueError(

+ "We expect your public key but detect a private key instead")

+ return [public_cert_content.strip()]

+
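The regular expression captures the base64 body between each BEGIN/END CERTIFICATE pair, so a PEM chain becomes the list of strings that an `x5c` JWT header expects, in the same order as the file. A standalone sketch of the same logic (copied rather than imported, with an unnamed capture group) applied to a made-up two-certificate chain; the bodies are placeholders, not real certificates:

```python
import re

def extract_certs(public_cert_content):
    # Each match is the base64 body between one pair of PEM certificate markers
    public_certificates = re.findall(
        r"-----BEGIN CERTIFICATE-----([^-]+)-----END CERTIFICATE-----",
        public_cert_content, re.I)
    if public_certificates:
        return [cert.strip() for cert in public_certificates]
    # No certificate markers: refuse something that looks like a private key
    if "PRIVATE KEY" in public_cert_content:
        raise ValueError("We expect your public key but detect a private key instead")
    return [public_cert_content.strip()]  # Assume a bare base64 certificate body

# Hypothetical chain: leaf first, then its issuer
chain = (
    "-----BEGIN CERTIFICATE-----\nMIIBleaf\n-----END CERTIFICATE-----\n"
    "-----BEGIN CERTIFICATE-----\nMIIBissuer\n-----END CERTIFICATE-----\n"
)
x5c = extract_certs(chain)
print(x5c)  # ['MIIBleaf', 'MIIBissuer']
```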

+

+def _merge_claims_challenge_and_capabilities(capabilities, claims_challenge):

+ # Represent capabilities as {"access_token": {"xms_cc": {"values": capabilities}}}

+ # and then merge/add it into incoming claims

+ if not capabilities:

+ return claims_challenge

+ claims_dict = json.loads(claims_challenge) if claims_challenge else {}

+ for key in ["access_token"]: # We could add "id_token" if we'd decide to

+ claims_dict.setdefault(key, {}).update(xms_cc={"values": capabilities})

+ return json.dumps(claims_dict)

+
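Client capabilities travel on the wire as an `xms_cc` entry inside the `claims` parameter, merged into whatever claims challenge the resource returned. A self-contained sketch of the helper above (renamed without the leading underscore), run against a hypothetical CAE-style challenge:

```python
import json

def merge_claims_challenge_and_capabilities(capabilities, claims_challenge):
    # No capabilities: pass the claims challenge (possibly None) through untouched
    if not capabilities:
        return claims_challenge
    claims_dict = json.loads(claims_challenge) if claims_challenge else {}
    # Add xms_cc under "access_token", keeping any claims already requested there
    claims_dict.setdefault("access_token", {}).update(xms_cc={"values": capabilities})
    return json.dumps(claims_dict)

# Hypothetical claims challenge, as a resource might emit it
challenge = '{"access_token": {"nbf": {"essential": true, "value": "1600000000"}}}'
merged = json.loads(merge_claims_challenge_and_capabilities(["CP1"], challenge))
print(merged["access_token"]["xms_cc"])  # {'values': ['CP1']}
```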

+

+def _str2bytes(raw):

+ # A conversion based on duck-typing rather than six.text_type

+ try:

+ return raw.encode(encoding="utf-8")

+ except:

+ return raw

+

+

+def _pii_less_home_account_id(home_account_id):

+ parts = home_account_id.split(".") # It could contain one or two parts

+ parts[0] = "********"

+ return ".".join(parts)

+

+

+def _clean_up(result):

+ if isinstance(result, dict):

+ if "_msalruntime_telemetry" in result or "_msal_python_telemetry" in result:

+ result["msal_telemetry"] = json.dumps({ # Telemetry as an opaque string

+ "msalruntime_telemetry": result.get("_msalruntime_telemetry"),

+ "msal_python_telemetry": result.get("_msal_python_telemetry"),

+ }, separators=(",", ":"))

+ return {

+ k: result[k] for k in result

+ if k != "refresh_in" # MSAL handled refresh_in, customers need not

+ and not k.startswith('_') # Skip internal properties

+ }

+ return result # It could be None

+
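`_clean_up()` packs any internal telemetry into a single opaque `msal_telemetry` string, then hides `refresh_in` and every underscore-prefixed key from the caller. A standalone sketch of the same filtering on a hypothetical token response; like the helper above, it also adds `msal_telemetry` to the dict it was given:

```python
import json

def clean_up(result):
    if isinstance(result, dict):
        if "_msalruntime_telemetry" in result or "_msal_python_telemetry" in result:
            # Pack both internal telemetry fields into one compact, opaque JSON string
            result["msal_telemetry"] = json.dumps({
                "msalruntime_telemetry": result.get("_msalruntime_telemetry"),
                "msal_python_telemetry": result.get("_msal_python_telemetry"),
            }, separators=(",", ":"))
        # Drop refresh_in and every underscore-prefixed internal key
        return {
            k: result[k] for k in result
            if k != "refresh_in" and not k.startswith("_")
        }
    return result  # Non-dict input, such as None, passes through unchanged

raw = {  # Hypothetical token response
    "access_token": "at", "expires_in": 3599, "refresh_in": 1800,
    "_msal_python_telemetry": {"api": 84},
}
cleaned = clean_up(raw)
print(sorted(cleaned))  # ['access_token', 'expires_in', 'msal_telemetry']
```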

+

+def _preferred_browser():

+ """Register Edge and return a name suitable for subsequent webbrowser.get(...)

+ when appropriate. Otherwise return None.

+ """

+ # On Linux, only Edge will provide device-based Conditional Access support

+ if sys.platform != "linux": # On other platforms, we have no browser preference

+ return None

+ browser_path = "/usr/bin/microsoft-edge" # Use a full path owned by sys admin

+ # Note: /usr/bin/microsoft-edge, /usr/bin/microsoft-edge-stable, etc.

+ # are symlinks that point to the actual binaries which are found under

+ # /opt/microsoft/msedge/msedge or /opt/microsoft/msedge-beta/msedge.

+ # Either method can be used to detect an Edge installation.

+ user_has_no_preference = "BROWSER" not in os.environ

+ user_wont_mind_edge = "microsoft-edge" in os.environ.get("BROWSER", "") # Note:

+ # BROWSER could contain "microsoft-edge" or "/path/to/microsoft-edge".

+ # Python documentation (https://docs.python.org/3/library/webbrowser.html)

+ # does not document the name being implicitly registered,

+ # so there is no public API to know whether the ENV VAR browser would work.

+ # Therefore, we would not bother examining the env var browser's type.

+ # We would just register our own Edge instance.

+ if (user_has_no_preference or user_wont_mind_edge) and os.path.exists(browser_path):

+ try:

+ import webbrowser # Lazy import. Some distros may not have this.

+ browser_name = "msal-edge" # Avoid popular name "microsoft-edge"

+ # otherwise `BROWSER="microsoft-edge"; webbrowser.get("microsoft-edge")`

+ # would return a GenericBrowser instance which won't work.

+ try:

+ registration_available = isinstance(

+ webbrowser.get(browser_name), webbrowser.BackgroundBrowser)

+ except webbrowser.Error:

+ registration_available = False

+ if not registration_available:

+ logger.debug("Register %s with %s", browser_name, browser_path)

+ # By registering our own browser instance with our own name,

+ # rather than populating a process-wide BROWSER env var,

+ # this approach does not have side effect on non-MSAL code path.

+ webbrowser.register( # Even double-register happens to work fine

+ browser_name, None, webbrowser.BackgroundBrowser(browser_path))

+ return browser_name

+ except ImportError:

+ pass # We may still proceed

+ return None

+
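The registration trick relies only on the standard-library `webbrowser` module: `webbrowser.get(name)` raises `webbrowser.Error` for a name it cannot resolve, and `webbrowser.register(name, None, instance)` makes that name resolvable without touching the process-wide `BROWSER` variable. A minimal sketch of that pattern, using a hypothetical name `demo-edge`; it never launches a browser, because `get()` only returns the controller object:

```python
import webbrowser

def register_browser(name, path):
    # webbrowser.get() raises webbrowser.Error for a name it cannot resolve
    try:
        already = isinstance(webbrowser.get(name), webbrowser.BackgroundBrowser)
    except webbrowser.Error:
        already = False
    if not already:
        # Registering under our own name leaves the BROWSER env var alone
        webbrowser.register(name, None, webbrowser.BackgroundBrowser(path))
    return name

name = register_browser("demo-edge", "/usr/bin/microsoft-edge")
controller = webbrowser.get(name)  # Returns the registered instance; opens nothing
print(type(controller).__name__)  # BackgroundBrowser
```

Calling `register_browser()` a second time simply finds the existing registration and returns the same name.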

+

+class _ClientWithCcsRoutingInfo(Client):

+

+ def initiate_auth_code_flow(self, **kwargs):

+ if kwargs.get("login_hint"): # eSTS could have utilized this as-is, but nope

+ kwargs["X-AnchorMailbox"] = "UPN:%s" % kwargs["login_hint"]

+ return super(_ClientWithCcsRoutingInfo, self).initiate_auth_code_flow(

+ client_info=1, # To be used as CCS routing info

+ **kwargs)

+

+ def obtain_token_by_auth_code_flow(

+ self, auth_code_flow, auth_response, **kwargs):

+ # Note: the obtain_token_by_browser() is also covered by this

+ assert isinstance(auth_code_flow, dict) and isinstance(auth_response, dict)

+ headers = kwargs.pop("headers", {})

+ client_info = json.loads(

+ decode_part(auth_response["client_info"])

+ ) if auth_response.get("client_info") else {}

+ if "uid" in client_info and "utid" in client_info:

+ # Note: The value of X-AnchorMailbox is also case-insensitive

+ headers["X-AnchorMailbox"] = "Oid:{uid}@{utid}".format(**client_info)

+ return super(_ClientWithCcsRoutingInfo, self).obtain_token_by_auth_code_flow(

+ auth_code_flow, auth_response, headers=headers, **kwargs)

+

+ def obtain_token_by_username_password(self, username, password, **kwargs):

+ headers = kwargs.pop("headers", {})

+ headers["X-AnchorMailbox"] = "upn:{}".format(username)

+ return super(_ClientWithCcsRoutingInfo, self).obtain_token_by_username_password(

+ username, password, headers=headers, **kwargs)

+

+
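After the auth code flow, the routing hint is derived from the server's `client_info`, a base64url-encoded JSON blob carrying `uid` and `utid`. A self-contained sketch of that derivation; `b64url_decode()` is a hypothetical stand-in for the `decode_part` helper imported above, assumed here to be plain base64url decoding with the padding restored:

```python
import base64
import json

def b64url_decode(raw):
    # Hypothetical stand-in for decode_part(): restore "=" padding, then decode
    return base64.urlsafe_b64decode(raw + "=" * (-len(raw) % 4)).decode("utf-8")

def anchor_mailbox_from_client_info(client_info_b64):
    client_info = json.loads(b64url_decode(client_info_b64)) if client_info_b64 else {}
    if "uid" in client_info and "utid" in client_info:
        return "Oid:{uid}@{utid}".format(**client_info)
    return None  # Without both ids there is no routing hint to send

# Hypothetical client_info, encoded the way a server would send it (no padding)
blob = json.dumps({"uid": "1111", "utid": "2222"}).encode("utf-8")
client_info = base64.urlsafe_b64encode(blob).decode("ascii").rstrip("=")
print(anchor_mailbox_from_client_info(client_info))  # Oid:1111@2222
```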

+class ClientApplication(object):

+ """You do not usually directly use this class. Use its subclasses instead:

+ :class:`PublicClientApplication` and :class:`ConfidentialClientApplication`.

+ """

+ ACQUIRE_TOKEN_SILENT_ID = "84"

+ ACQUIRE_TOKEN_BY_REFRESH_TOKEN = "85"

+ ACQUIRE_TOKEN_BY_USERNAME_PASSWORD_ID = "301"

+ ACQUIRE_TOKEN_ON_BEHALF_OF_ID = "523"

+ ACQUIRE_TOKEN_BY_DEVICE_FLOW_ID = "622"

+ ACQUIRE_TOKEN_FOR_CLIENT_ID = "730"

+ ACQUIRE_TOKEN_BY_AUTHORIZATION_CODE_ID = "832"

+ ACQUIRE_TOKEN_INTERACTIVE = "169"

+ GET_ACCOUNTS_ID = "902"

+ REMOVE_ACCOUNT_ID = "903"

+

+ ATTEMPT_REGION_DISCOVERY = True # "TryAutoDetect"

+

+ def __init__(

+ self, client_id,

+ client_credential=None, authority=None, validate_authority=True,

+ token_cache=None,

+ http_client=None,

+ verify=True, proxies=None, timeout=None,

+ client_claims=None, app_name=None, app_version=None,

+ client_capabilities=None,

+ azure_region=None, # Note: We choose to add this param in this base class,

+ # despite it is currently only needed by ConfidentialClientApplication.

+ # This way, it holds the same positional param place for PCA,

+ # when we would eventually want to add this feature to PCA in future.

+ exclude_scopes=None,

+ http_cache=None,

+ instance_discovery=None,

+ allow_broker=None,

+ enable_pii_log=None,

+ ):

+ """Create an instance of application.

+

+ :param str client_id: Your app has a client_id after you register it on AAD.

+

+ :param Union[str, dict] client_credential:

+ For :class:`PublicClientApplication`, you simply use `None` here.

+ For :class:`ConfidentialClientApplication`,

+ it can be a string containing client secret,

+ or an X509 certificate container in this form::

+

+ {

+ "private_key": "...-----BEGIN PRIVATE KEY-----... in PEM format",

+ "thumbprint": "A1B2C3D4E5F6...",

+ "public_certificate": "...-----BEGIN CERTIFICATE-----... (Optional. See below.)",

+ "passphrase": "Passphrase if the private_key is encrypted (Optional. Added in version 1.6.0)",

+ }

+

+ MSAL Python requires a "private_key" in PEM format.

+ If your cert is in a PKCS12 (.pfx) format, you can also

+ `convert it to PEM and get the thumbprint `_.

+

+ The thumbprint is available in your app's registration in Azure Portal.

+ Alternatively, you can `calculate the thumbprint `_.

+

+ *Added in version 0.5.0*:

+ public_certificate (optional) is a public key certificate

+ which will be sent through 'x5c' JWT header only for

+ subject name and issuer authentication to support cert auto rolls.

+

+ Per `specs `_,

+ "the certificate containing

+ the public key corresponding to the key used to digitally sign the

+ JWS MUST be the first certificate. This MAY be followed by

+ additional certificates, with each subsequent certificate being the

+ one used to certify the previous one."

+ However, your certificate's issuer may use a different order.

+ So, if your attempt ends up with an error AADSTS700027 -

+ "The provided signature value did not match the expected signature value",

+ you may try using only the leaf cert (in PEM/str format) instead.

+

+ *Added in version 1.13.0*:

+ It can also be a completely pre-signed assertion that you've assembled yourself.

+ Simply pass a container containing only the key "client_assertion", like this::

+

+ {

+ "client_assertion": "...a JWT with claims aud, exp, iss, jti, nbf, and sub..."

+ }

+

+ :param dict client_claims:

+ *Added in version 0.5.0*:

+ It is a dictionary of extra claims that would be signed by

+ this :class:`ConfidentialClientApplication` 's private key.

+ For example, you can use {"client_ip": "x.x.x.x"}.

+ You may also override any of the following default claims::

+

+ {

+ "aud": the_token_endpoint,

+ "iss": self.client_id,

+ "sub": same_as_issuer,

+ "exp": now + 10_min,

+ "iat": now,

+ "jti": a_random_uuid

+ }

+

+ :param str authority:

+ A URL that identifies a token authority. It should be of the format

+ ``https://login.microsoftonline.com/your_tenant``.

+ By default, we will use ``https://login.microsoftonline.com/common``.

+

+ *Changed in version 1.17*: you can also use predefined constant

+ and a builder like this::

+

+ from msal.authority import (

+ AuthorityBuilder,

+ AZURE_US_GOVERNMENT, AZURE_CHINA, AZURE_PUBLIC)

+ my_authority = AuthorityBuilder(AZURE_PUBLIC, "contoso.onmicrosoft.com")

+ # Now you get an equivalent of

+ # "https://login.microsoftonline.com/contoso.onmicrosoft.com"

+

+ # You can feed such an authority to msal's ClientApplication

+ from msal import PublicClientApplication

+ app = PublicClientApplication("my_client_id", authority=my_authority, ...)

+

+ :param bool validate_authority: (optional) Turns authority validation

+ on or off. This parameter defaults to True.

+ :param TokenCache token_cache:

+ Sets the token cache used by this ClientApplication instance.

+ By default, an in-memory cache will be created and used.

+ :param http_client: (optional)

+ Your implementation of the abstract class HttpClient.

+ Defaults to a requests session instance.

+ Since MSAL 1.11.0, the default session is configured

+ to attempt one retry on connection error.

+ If you are providing your own http_client,

+ it will be your http_client's duty to decide whether to perform retry.

+

+ :param verify: (optional)

+ It will be passed to the

+ `verify parameter in the underlying requests library

+ `_.

+ This does not apply if you have chosen to pass your own HTTP client.

+ :param proxies: (optional)

+ It will be passed to the

+ `proxies parameter in the underlying requests library

+ `_.

+ This does not apply if you have chosen to pass your own HTTP client.

+ :param timeout: (optional)

+ It will be passed to the

+ `timeout parameter in the underlying requests library

+ `_.

+ This does not apply if you have chosen to pass your own HTTP client.

+ :param app_name: (optional)

+ You can provide your application name for Microsoft telemetry purposes.

+ The default value is None, which means it will not be passed to Microsoft.

+ :param app_version: (optional)

+ You can provide your application version for Microsoft telemetry purposes.

+ The default value is None, which means it will not be passed to Microsoft.

+ :param list[str] client_capabilities: (optional)

+ Allows configuration of one or more client capabilities, e.g. ["CP1"].

+

+ Client capability is meant to inform the Microsoft identity platform

+ (STS) what this client is capable of,

+ so that STS can decide to turn on certain features.

+ For example, if the client is capable of handling a *claims challenge*,

+ STS can then issue CAE access tokens to resources,

+ knowing that when the resource emits a *claims challenge*,

+ the client will be able to handle it.

+

+ Implementation details:

+ Client capability is implemented using the "claims" parameter on the wire,

+ for now.

+ MSAL will combine them into

+ `claims parameter `_

+ which you will later provide via one of the acquire-token requests.

+

+ :param str azure_region:

+ AAD provides regional endpoints for apps to opt in

+ to keep their traffic inside that region.

+

+ As of May 2021, regional service is only available for

+ ``acquire_token_for_client()`` sent by any of the following scenarios:

+

+ 1. An app powered by a capable MSAL

+ (MSAL Python 1.12+ will be provisioned)

+

+ 2. An app with managed identity, which is formerly known as MSI.

+ (However MSAL Python does not support managed identity,

+ so this one does not apply.)

+

+ 3. An app authenticated by

+ `Subject Name/Issuer (SNI) `_.

+

+ 4. An app which has already been onboarded to the region's allow-list.

+

+ This parameter defaults to None, which means region behavior remains off.

+

+ App developers can opt in to a regional endpoint

+ by providing its region name, such as "westus" or "eastus2".

+ You can find a full list of regions by running

+ ``az account list-locations -o table``, or by referring to

+ `this doc `_.

+

+ An app running inside Azure Functions or an Azure VM can use a special keyword

+ ``ClientApplication.ATTEMPT_REGION_DISCOVERY`` to auto-detect region.

+

+ .. note::

+

+ Setting ``azure_region`` to non-``None`` for an app running

+ outside of Azure Functions or an Azure VM could hang indefinitely.

+

+ You should consider opting in or out of region behavior on demand,

+ by loading ``azure_region=None`` or ``azure_region="westus"``

+ or ``azure_region=True`` (which means opt-in and auto-detect)

+ from your per-deployment configuration, and then do

+ ``app = ConfidentialClientApplication(..., azure_region=azure_region)``.

+

+ Alternatively, you can configure a short timeout,

+ or provide a custom http_client which has a short timeout.

+ That way, the latency stays under your control,

+ though it is still less performant than opting out of the region feature.
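The note above suggests loading the region setting from per-deployment configuration. The sketch below shows one way to do that. It reads a hypothetical ``MSAL_AZURE_REGION`` environment variable and maps it to one of the three values the note mentions: ``None`` to opt out, ``True`` to opt in with auto-detection, or a region name such as ``"westus"``. The variable name and the accepted spellings "auto" and "true" are assumptions.

```python
import os
from typing import Mapping, Optional, Union


def load_azure_region(
        environ: Mapping[str, str] = os.environ,
        variable: str = "MSAL_AZURE_REGION",  # Hypothetical variable name
        ) -> Optional[Union[str, bool]]:
    """Translate a deployment setting into an ``azure_region`` value.

    unset or empty  -> None  (region behavior stays off)
    "auto"/"true"   -> True  (opt in and auto-detect; Azure Functions/VM only)
    anything else   -> the region name itself, e.g. "westus"
    """
    raw = environ.get(variable, "").strip()
    if not raw:
        return None
    if raw.lower() in ("auto", "true"):
        return True
    return raw


azure_region = load_azure_region()
# app = msal.ConfidentialClientApplication(..., azure_region=azure_region)
```

With this approach, a deployment outside Azure keeps the variable unset. It therefore never risks the hang described in the note.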

+

+ New in version 1.12.0.

+

+ :param list[str] exclude_scopes: (optional)

+ Historically, MSAL hardcodes the ``offline_access`` scope,

+ which allows your app to have prolonged access to the user's data.

+ If that is unnecessary or undesirable for your app,

+ now you can use this parameter to supply an exclusion list of scopes,

+ such as ``exclude_scopes = ["offline_access"]``.
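The sketch below gives a rough picture of what an exclusion list does. It adds a set of reserved scopes to the requested ones and then subtracts the excluded ones. The reserved set ``{"openid", "profile", "offline_access"}`` and the helper name ``effective_scopes`` are assumptions made for illustration. The exact scopes MSAL adds may differ.

```python
from typing import FrozenSet, Iterable

# Assumed scopes that get added to every request (illustration only)
RESERVED_SCOPES: FrozenSet[str] = frozenset({"openid", "profile", "offline_access"})


def effective_scopes(
        requested: Iterable[str],
        exclude_scopes: Iterable[str] = ()) -> FrozenSet[str]:
    """Return the scopes that would be sent after applying the exclusions."""
    return (frozenset(requested) | RESERVED_SCOPES) - frozenset(exclude_scopes)


print(sorted(effective_scopes(["User.Read"], exclude_scopes=["offline_access"])))
# ['User.Read', 'openid', 'profile']
```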

+

+ :param dict http_cache:

+ MSAL has long been caching tokens in the ``token_cache``.

+ Recently, MSAL also introduced the concept of ``http_cache``,

+ which automatically caches a finite amount of non-token HTTP responses,

+ so that *long-lived*

+ ``PublicClientApplication`` and ``ConfidentialClientApplication``

+ would be more performant and responsive in some situations.

+

+ This ``http_cache`` parameter accepts any dict-like object.

+ If not provided, MSAL will use an in-memory dict.
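Any dict-like object is accepted, so you can wrap the storage with extra behavior. The following sketch is a hypothetical ``collections.abc.MutableMapping`` wrapper that records whether anything changed. A CLI could use that flag to skip rewriting its persisted cache file when nothing was updated. The class name, the ``dirty`` flag and the ``snapshot()`` method are assumptions made for illustration.

```python
from collections.abc import MutableMapping
from typing import Any, Dict, Iterator, Optional


class DirtyTrackingCache(MutableMapping):
    """A dict-like object that remembers whether it has been modified."""

    def __init__(self, initial: Optional[Dict[Any, Any]] = None) -> None:
        self._data: Dict[Any, Any] = dict(initial or {})
        self.dirty = False  # Becomes True after any write or delete

    def __getitem__(self, key: Any) -> Any:
        return self._data[key]

    def __setitem__(self, key: Any, value: Any) -> None:
        self._data[key] = value
        self.dirty = True

    def __delitem__(self, key: Any) -> None:
        del self._data[key]
        self.dirty = True

    def __iter__(self) -> Iterator[Any]:
        return iter(self._data)

    def __len__(self) -> int:
        return len(self._data)

    def snapshot(self) -> Dict[Any, Any]:
        """Return a plain dict copy, e.g. for pickling."""
        return dict(self._data)
```

For example, you could pass ``http_cache=DirtyTrackingCache(persisted_http_cache)`` and write the file back on exit only when ``dirty`` is True.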

+

+ If your app is a command-line app (CLI),

+ you may want to persist your ``http_cache`` across CLI runs.

+ The following recipe shows a way to do so::

+

+ # Just add the following lines at the beginning of your CLI script

+ import sys, atexit, pickle

+ import msal

+ http_cache_filename = sys.argv[0] + ".http_cache"

+ try:

+     with open(http_cache_filename, "rb") as f:

+         persisted_http_cache = pickle.load(f)  # Take a snapshot

+ except (

+         FileNotFoundError,  # Or IOError in Python 2

+         pickle.UnpicklingError,  # A corrupted http cache file

+         EOFError,  # An empty (e.g. truncated) http cache file

+         ):

+     persisted_http_cache = {}  # Recover by starting afresh

+ def _flush_http_cache():  # On exit, flush the cache back to the file.

+     # It may occasionally overwrite another process's concurrent write,

+     # but that is fine. Subsequent runs will reach eventual consistency.

+     with open(http_cache_filename, "wb") as f:

+         pickle.dump(persisted_http_cache, f)

+ atexit.register(_flush_http_cache)

+

+ # And then you can implement your app as you normally would

+ app = msal.PublicClientApplication(

+     "your_client_id",

+     # ...,  Other parameters as usual

+     http_cache=persisted_http_cache,  # Utilize persisted_http_cache

+     # token_cache=...,  # You may combine the old token_cache trick

+     # Please refer to token_cache recipe at

+     # https://msal-python.readthedocs.io/en/latest/#msal.SerializableTokenCache

+     )

+ app.acquire_token_interactive(["your", "scope"], ...)
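The recipe above writes the pickle directly into the destination file. You might prefer a variant that writes to a temporary file in the same directory first and then swaps it in with ``os.replace`` (Python 3.3+). With that approach, a crash in the middle of a write does not leave a half-written cache behind. The variant is sketched below. It also treats an empty file (``EOFError``) as a cache miss. The helper names ``load_http_cache`` and ``save_http_cache`` are hypothetical and are not part of MSAL.

```python
import os
import pickle
import tempfile


def load_http_cache(filename):
    """Load a previously persisted http_cache, or start afresh."""
    try:
        with open(filename, "rb") as f:
            return pickle.load(f)
    except (FileNotFoundError, pickle.UnpicklingError, EOFError):
        return {}  # Missing, corrupted or empty file: recover with an empty cache


def save_http_cache(cache, filename):
    """Persist ``cache`` by writing a temp file, then renaming it over ``filename``."""
    directory = os.path.dirname(os.path.abspath(filename))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    try:
        with os.fdopen(fd, "wb") as f:
            pickle.dump(dict(cache), f)
        os.replace(tmp_path, filename)  # Atomic rename on POSIX filesystems
    except BaseException:
        os.remove(tmp_path)  # Do not leave a stray temp file behind
        raise

# Usage in a CLI script, mirroring the recipe above:
#   persisted_http_cache = load_http_cache(http_cache_filename)
#   atexit.register(save_http_cache, persisted_http_cache, http_cache_filename)
```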

+

+ Content inside ``http_cache`` is cheap to obtain.

+ There is no need to share them among different apps.

+

+ Content inside ``http_cache`` contains neither tokens nor

+ Personally Identifiable Information (PII), so encryption is unnecessary.

+

+ New in version 1.16.0.

+

+ :param boolean instance_discovery:

+ Historically, MSAL would connect to a central endpoint located at

+ ``https://login.microsoftonline.com`` to acquire some metadata,

+ especially when using an unfamiliar authority.

+ This behavior is known as Instance Discovery.

+

+ This parameter defaults to None, which enables Instance Discovery.
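The sketch below gives a sense of what Instance Discovery involves. It only builds the kind of discovery URL a client could query against the central endpoint, and it makes no network call. Several details reflect our understanding and should be treated as assumptions rather than a documented contract: the ``/common/discovery/instance`` path, the ``api-version`` value, the ``authorization_endpoint`` query parameter and its ``/oauth2/v2.0/authorize`` suffix. The helper name is also an assumption.

```python
from urllib.parse import urlencode

# The central endpoint named in the docstring
CENTRAL_HOST = "https://login.microsoftonline.com"


def instance_discovery_url(authority: str, api_version: str = "1.1") -> str:
    """Build, but do not send, an Instance Discovery URL for ``authority``.

    Assumptions: the path, the api-version value and the query parameter names.
    """
    authorization_endpoint = authority.rstrip("/") + "/oauth2/v2.0/authorize"
    query = urlencode({
        "api-version": api_version,  # Assumed API version
        "authorization_endpoint": authorization_endpoint,
    })
    return CENTRAL_HOST + "/common/discovery/instance?" + query


print(instance_discovery_url("https://login.microsoftonline.com/contoso.onmicrosoft.com"))
```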

+

+ If you know of some authorities that MSAL may operate with as-is,

+ without involving any Instance Discovery, the recommended pattern is::

+

+ known_authorities = frozenset([ # Treat your known authorities as const

+ "https://contoso.com/adfs", "https://login.azs/foo"])

+ ...

+ authority = "https://contoso.com/adfs" # Assuming your app will use this

+ app1 = PublicClientApplication(

+ "client_id",

+ authority=authority,

+ # Conditionally disable Instance Discovery for known authorities

+ instance_discovery=authority not in known_authorities,

+ )
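The recipe above compares authority strings exactly. An authority with a trailing slash, such as ``"https://contoso.com/adfs/"``, or one with a differently-cased host would therefore miss the set and silently re-enable Instance Discovery. The sketch below makes that comparison more forgiving with a hypothetical normalization helper. Which differences are safe to ignore is an assumption you should check against your own authorities. Here the helper ignores trailing slashes and the case of the scheme and host.

```python
from urllib.parse import urlsplit, urlunsplit


def normalize_authority(authority: str) -> str:
    """Lower-case the scheme and host, and drop a trailing slash from the path."""
    parts = urlsplit(authority)
    return urlunsplit((
        parts.scheme.lower(),
        parts.netloc.lower(),
        parts.path.rstrip("/"),
        parts.query,
        parts.fragment,
    ))


known_authorities = frozenset(normalize_authority(a) for a in [
    "https://contoso.com/adfs", "https://login.azs/foo"])

authority = "https://Contoso.com/adfs/"
instance_discovery = normalize_authority(authority) not in known_authorities
print(instance_discovery)  # False: this authority is known, so discovery is skipped
```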

+

+ If you do not know your authorities beforehand,

+ yet still want MSAL to accept any authority that you will provide,

+ you can pass ``False`` to unconditionally disable Instance Discovery.

+

+ New in version 1.19.0.

+

+ :param boolean allow_broker:

+ This parameter is NOT applicable to :class:`ConfidentialClientApplication`.

+

+ A broker is a component installed on your device.

+ Broker implicitly gives your device an identity. By using a broker,

+ your device becomes a factor that can satisfy MFA (Multi-factor authentication).

+ This factor would become mandatory

+ if a tenant's admin enables a corresponding Conditional Access (CA) policy.

+ The broker's presence allows the Microsoft identity platform

+ to have higher confidence that the tokens are being issued to your device,

+ which is more secure.

+

+ An additional benefit of a broker is that

+ it runs as a long-lived process with your device's OS

+ and maintains its own cache,

+ so your broker-enabled apps (even a CLI)

+ can automatically SSO from a previously established signed-in session.

+

+ This parameter defaults to None, which means MSAL will not utilize a broker.

+ If this parameter is set to True,

+ MSAL will use the broker whenever possible,

+ and will otherwise fall back to non-broker behavior automatically.

+ That also means your app does not need to enable broker conditionally,

+ you can always set allow_broker to True,